ACRONYM LIST

| Acronym | Meaning |
|---|---|
| CBA | Cost Benefit Analysis |
| CP | Condition Precedent |
| DQR | Data Quality Review |
| EIF | Entry into Force |
| EMC | Evaluation Management Committee |
| ERR | Economic Rate of Return |
| ITT | Indicator Tracking Table |
| KPI | Key Performance Indicator |
| M&E | Monitoring & Evaluation |
| MCC | Millennium Challenge Corporation |
| NARA | National Archives and Records Administration |
| QDRP | Quarterly Disbursement Request Package |
| RCM | Resident Country Mission |
| RDA | Results Definition Assessment |
| TREDD | Transparent, Reproducible, and Ethical Data and Documentation |
| USC | United States Code |

PURPOSE

The Millennium Challenge Corporation’s (MCC) Monitoring and Evaluation (M&E) Policy is designed to help MCC and its partner countries measure the results of its programs. It builds on the mandate in MCC’s founding statute to define and measure specific results and is predicated on the principles of accountability, transparency, and learning.1 This policy is intended to help MCC staff and partner countries work together to uphold MCC’s commitment to evidence-based decision-making to improve development effectiveness.

SCOPE

This policy sets forth requirements for and governs the monitoring and evaluation of all MCC compact and threshold programs. It seeks to establish the roles, responsibilities, and key expectations regarding the design, conduct, dissemination, and use of monitoring and evaluation at MCC.

Unless otherwise noted, all requirements apply to both compact and threshold programs.

Existing M&E Plans and evaluations with Evaluation Design Reports approved by MCC prior to the effective date of this policy are not required to comply with its provisions. However, any revisions to such plans or reports that MCC approves on or after the effective date of this policy should strive to comply with this policy to the extent that compliance is feasible and cost-effective.

AUTHORITIES

Acts

- Section 609(b)(1)(C) of the Millennium Challenge Act of 2003, as amended (MCA Act)

- Foreign Aid Transparency and Accountability Act of 2016 (Pub. L. No. 114-191, 130 Stat. 66 (2016))

Related MCC Policies and Guidelines

- Federal Records Management Policy (AF-2007-27, internal, August 2020)

- Compact Development Guidance (https://www.mcc.gov/resources/pub-pdf/guidance-compact-development-guidance, February 2017)

- Cost Benefit Analysis Guidelines (https://www.mcc.gov/resources/doc/cost-benefit-analysis-guidelines, June 2021)

- Economist/M&E Specialist Position Description (internal, March 2018)

- Evaluation Management Guidance (https://www.mcc.gov/resources/doc/guidance-evaluation-management, August 2020)

- Evaluation Question Guidance (internal, February 2022)

- Evaluation Risk Assessment checklist (internal, updated annually)

- Gender Policy (https://www.mcc.gov/resources/doc/gender-policy, May 2012)

- Guidance for Creating a Completeness Index (internal, March 2020)

- M&E Plan Guidance (internal, June 2022)

- Guidance on Common Indicators (https://www.mcc.gov/resources/doc/guidance-on-common-indicators, September 2021)

- Guidelines for Economic and Beneficiary Analysis (https://www.mcc.gov/resources/story/story-cdg-guidelines-for-economic-and-beneficiary-analysis, March 2017)

- Guidelines on Transparent, Reproducible, and Ethical Data and Documentation (TREDD, https://www.mcc.gov/resources/doc/guidance-mcc-guidelines-tredd, March 2020)

- Inclusion and Gender Strategy (https://www.mcc.gov/resources/doc/inclusion-gender-strategy, October 2022)

- Indicator Tracking Table Guidance (https://www.mcc.gov/resources/doc/guidance-on-the-indicator-tracking-table, July 2021)

- MCC Guidance to Accountable Entities on the Quarterly Disbursement Request Package (https://www.mcc.gov/resources/doc/quarterly-mca-disbursement-request-and-reporting-package, July 2020)

- Policy on the Approval of Modifications to MCC Compact Programs (internal, February 2012)

- Results Definition Assessment (forthcoming)

- Terms of Reference for Data Review Consultancy (internal, April 2018)

- Template for the Program Monitoring & Evaluation Framework (internal, September 2017)

- MCC Guidance to Accountable Entities on Technical Reviews and No-Objections (https://www.mcc.gov/resources/doc/guidance-mcc-guidance-to-accountable-entities-on-technical-reviews-and-no-objections, February 2022)

- MCC Guidance on Processing Accountable Entity Requests for No-Objection (internal, February 2021)

KEY DEFINITIONS

When used in this policy, the following terms, whether capitalized or not, have the respective meanings given to them below.

Accountable — Obliged or willing to accept responsibility or to account for the achievement of targeted results of a program.

Accountable Entity (AE) — The entity designated by the government of the country receiving assistance from MCC to oversee and manage implementation of the compact or threshold program on behalf of the government. The Accountable Entity is often referred to as the Millennium Challenge Account or MCA.

Activity — Actions taken or work performed through which inputs, such as funds, technical assistance, and other types of resources, are mobilized to produce specific outputs. Typically, multiple activities make up one project and work together to meet the associated Project Objective.

Actual — A data point that shows what has been completed, as opposed to a number that is a target or a prediction.

Attribute — To show that a change in a particular outcome was caused by an intervention or set of interventions.

Baseline — The value of an indicator prior to a development intervention, against which progress can be assessed or comparisons made.

Beneficiary2 — An individual who is expected to experience better standards of living as a result of the project through higher real incomes.

Beneficiary Analysis3 — An analysis that describes the expected project impact on the poor and other important demographic groups. It answers three basic inter-related questions:

- Beneficiaries: How many people are expected to benefit from increased household incomes as a result of the project, and what proportion of them is poor?

- The Magnitude of Benefits: How much, on average, will each individual beneficiary gain from the project?

- Cost Effectiveness: For each dollar of MCC funds invested, how much will be gained by the poor?

Common Indicator — An indicator for which MCC has a standardized definition in MCC’s Guidance on Common Indicators. Common indicators allow for consistent measurement and reporting across programs and enable aggregate reporting where relevant.

Compact — The agreement known as a Millennium Challenge Compact, entered into between the United States of America, acting through MCC, and a country, pursuant to which MCC provides assistance to the country under Section 605(a) of the MCA Act.

Compact Program — A group of projects implemented together using funding provided by MCC under a Compact.

Completeness Index — A measure that MCC uses to assess the extent to which proposed activities have been defined in measurable terms. It is part of the forthcoming Results Definition Assessment.

Cost Benefit Analysis (CBA)4 — An analysis that models the economic logic of a proposed project in quantitative terms by estimating the economic benefits that can be directly attributed to the proposed project and comparing them to the project’s costs over a set period of time.

Counterfactual — The scenario which hypothetically would have occurred for targeted individuals or groups had there been no project.

Country Team — A multidisciplinary team of MCC staff that manages the development and implementation of a compact or threshold program in coordination with their Accountable Entity counterparts.

Data Quality Review (DQR) — A mechanism to assess the quality and utility of performance information.

Disclosure Review Board (DRB)5 — The administrative body established by MCC in 2013 to (i) develop, review, and approve guidelines and procedures (including modifications thereto) for data activities; (ii) review and approve proposals related to data disclosure; and (iii) notify the MCC Incident Response team in the event of an identified, specific disclosure risk (spill, breach, etc.) and follow MCC protocol for risk management.

Economic Rate of Return (ERR)6 — A CBA summary metric that captures the rate at which economic resources invested in a project deliver economic benefits to society. Because it is a rate rather than an absolute amount, it is useful for comparing projects that may vary significantly in size.

Entry into Force (EIF) — The point in time when a compact or threshold program agreement comes into full legal force and effect and its term begins.

Evaluation — is defined in Section 5.1.

Evaluation Brief — A brief document created with each independent evaluation report, summarizing the key results and lessons learned from the evaluation.

Evaluation Management Committee (EMC) — Committee of MCC staff established early in program development to make critical decisions about a particular independent evaluation throughout the evaluation’s duration.

Evaluation Risk Assessment — Internal process led by the MCC M&E team to identify and manage risks preventing unbiased, rigorous, ethical, and meaningful analyses by independent evaluators of the results of MCC’s investments for accountability, learning, and transparency.

Exposure Period — The period of time a participant needs to be exposed to an intervention (“treatment”) to reasonably expect to observe the intended impact on outcome(s). Also referred to as the “timeline for results.” See “treatment” definition below.

Federal Record — All books, papers, maps, photographs, machine readable materials, or other documentary materials, regardless of physical form or characteristics, made or received by an agency of the United States Government under Federal law or in connection with the transaction of public business and preserved or appropriate for preservation by that agency or its legitimate successor as evidence of the organization, functions, policies, decisions, procedures, operations or other activities of the United States Government or because of the informational value of the data in them. (44 USC §3301). Records include such items created or maintained by a United States Government contractor, licensee, certificate holder, or grantee that are subject to the sponsoring agency’s control under the terms of the entity’s agreement with the agency.

Final Evaluation — The report assessing a project logic’s final targeted results. If more than one evaluation report was prepared, it is the last report.

Impact Evaluation (IE) — is defined in Section 6.4.2.

Implementing Entity — Any government affiliate engaged by the Accountable Entity to play a role in achieving the Project Objectives as defined in the compact or threshold program agreement.

Indicator — A quantitative or qualitative variable that provides a simple and reliable means to measure achievement of a targeted result.

Indicator Input — A component of a composite indicator, such as a percentage or ratio. The most common examples of indicator inputs are the numerator and denominator used to calculate a rate or percentage indicator.

Indicator Tracking Table (ITT) — A quarterly reporting tool that tracks progress on the indicators specified in a country’s M&E Plan that are available for regular reporting towards program targets. It is submitted as part of each Quarterly Disbursement Request Package.

Interim Evaluation — An assessment of a project logic’s targeted results that takes place before the final evaluation and measures results earlier in the logic than the Project Objective.

Investment Management Committee (IMC) — Committee of MCC senior staff members that advises MCC’s Chief Executive Officer on policy matters, including investment decisions.

Investment Memo — An internal memorandum produced by MCC staff that presents project recommendations for IMC review and approval by MCC’s Chief Executive Officer.

Key Performance Indicator (KPI) — An indicator selected from the M&E Plan that is reported quarterly in the Table of Key Performance Indicators. The indicators are selected yearly by the country teams to best reflect the current state of the program.

M&E Plan — is defined in Section 6.3.1.

M&E Policy Results Definition Standard — is defined in Section 6.2.4.2.

MCA Act — is defined in Section 3.1.

MCC Evidence Platform — MCC’s external, web-based platform for sharing the data and documentation produced by MCC-funded evaluations and other studies.

MCC Learning — Document detailing the practical lessons identified by MCC staff, based on an interim or final evaluation report, to be applied going forward.

Monitoring — is defined in Section 5.1.

Outcome — The targeted results of an intervention’s outputs.

Outcome Indicator — An indicator that measures a targeted result of an intervention’s outputs.

Output — The goods or services produced as the direct result of the expenditure of program funds.

Output Indicator — An indicator that measures the goods or services produced as the direct result of the expenditure of program funds.

Participant — An individual who takes part in an MCC-funded project.

Performance Evaluation — is defined in Section 6.4.2.

Process Indicator — An indicator that measures progress toward the completion of an activity, which is a step toward the achievement of project outputs. It serves as a way to ensure the work plan is proceeding on time.

Program — A Compact Program or Threshold Program, as applicable.

Program Agreement — A Compact, Threshold Program Grant Agreement, or both as the context may require.

Program Monitoring & Evaluation Framework — Refers to the section of the compact or threshold agreement that includes an overview of the plan for monitoring and evaluating the projects funded under such agreement, including the project logics, indicators, and targets defined at the time the agreement is signed.

Project — A group of Activities implemented together to achieve a Project Objective.

Project Logic — is defined in Section 6.1.1. Although this concept is also called “program logic” or “theory of change” in general project design terminology, MCC uses the term “project logic” because each MCC-funded project is designed to achieve a stated Project Objective.

Project Objective — The primary outcome that a project intends to achieve to be considered successful. The Project Objective is stated in the program agreement (compact or threshold) in Section 1.2 under the heading “Project Objectives.”

Result — An output or outcome.

Results Indicator — An indicator that measures an output or outcome.

Risk/Assumption Indicator — An indicator that measures a risk or assumption in the project logic.

Target — The expected value for a particular indicator at a particular time.

Threshold Program — A group of projects implemented together using funding provided by MCC under a Threshold Program Agreement.

Threshold Program Agreement — The grant agreement entered into between the United States of America, acting through MCC, and a country, pursuant to which MCC provides assistance to such country under Section 616 of the MCA Act to assist the country in becoming eligible for a compact program.

Treatment — The interventions to which program participants and/or people expected to benefit are exposed. Treatment is defined differently depending on the project. In a teacher training project, for example, treatment may be defined as training and so exposure to treatment begins the first day of training. In an electricity infrastructure project, treatment may be defined as energized new grid infrastructure and so exposure to treatment begins the day the grid is energized.

INTRODUCTION

Background

The Millennium Challenge Corporation is an independent U.S. government foreign assistance agency with a mission to reduce poverty through economic growth in the countries that receive its assistance. It is an evidence-based institution that executes policy and investment decisions based on available empirical evidence, development theory, and international best practices.

MCC’s model is based on a set of core principles essential for effective development assistance — good governance, country ownership, a focus on results, and transparency.7 To operationalize MCC’s focus on results, project leads and other MCC and partner country team members collaborate to design and manage for results, while monitoring and evaluation (M&E) staff measure results and produce evidence on the effectiveness of MCC’s investments that will inform future agency decisions.

Drawing from these core agency-wide principles, MCC’s M&E approach as reflected in this policy’s requirements seeks to embody three principles: accountability, learning, and transparency.

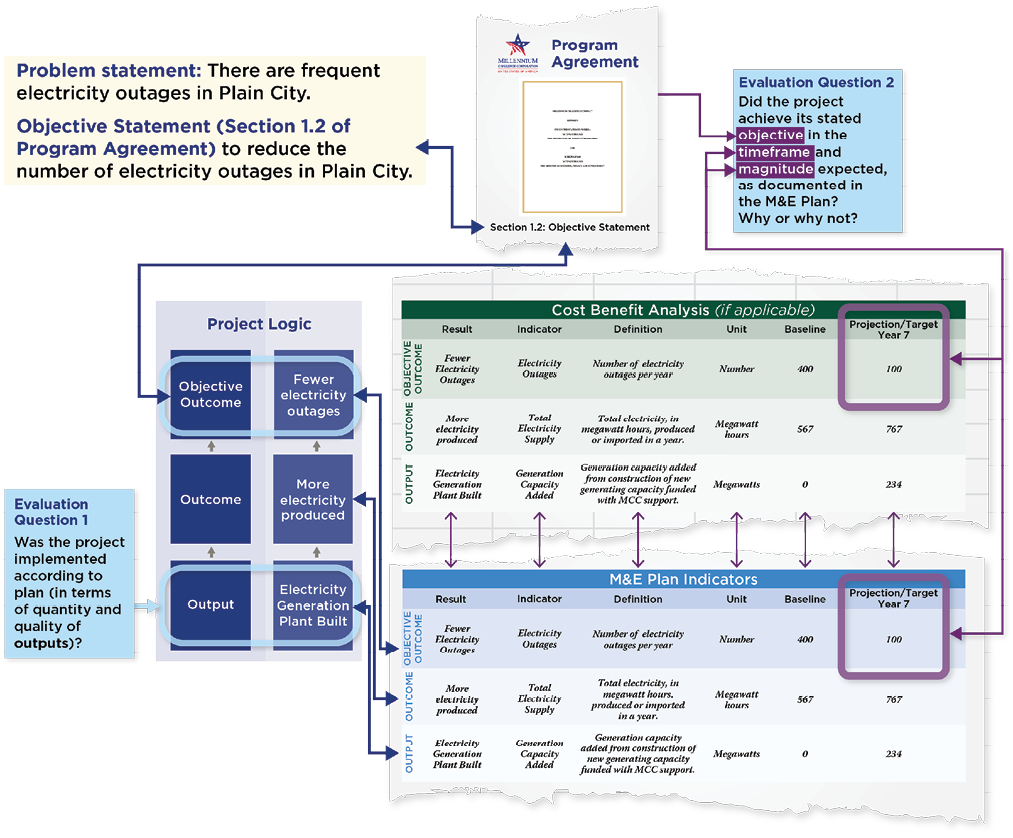

To this end, MCC asks the following questions with respect to each project that it funds:

- To what extent was the project implemented according to plan (in terms of quantity and quality of outputs)?

- Did the project achieve its stated objective in the timeframe and magnitude expected, as documented in the current M&E Plan? Why or why not?

Monitoring is the continuous, systematic collection of data on specified indicators to measure progress toward Project Objectives and the achievement of intermediate results along the way.

Evaluation is the systematic collection and analysis of information about the characteristics and outcomes of a project.8

Monitoring provides timely, high-quality data to understand whether the project is proceeding as planned, on schedule, and following the logic of the project. MCC and its partner countries are expected to use this data throughout the life of the program to assist in making decisions about the program’s implementation.

Although effective monitoring is necessary for program management, it is not sufficient for assessing whether the expected results of an intervention are achieved. MCC therefore uses evaluations to understand the effectiveness of its programs. MCC and its partner countries are expected to use evaluation results to inform decision-making on future programs.9

MCC M&E Principles

The requirements set forth in this policy seek to embody the following core principles:

Accountability

MCC, as a publicly financed entity, holds itself and its partner countries accountable by determining whether a project achieved its intended objective. MCC’s authorizing legislation requires compacts to state “the specific objectives that the country and the United States expect to achieve during the term of the \[C]ompact;” and “regular benchmarks to measure, where appropriate, progress toward achieving such objectives.”10

Learning

MCC is committed to learning why a project did or did not achieve its stated objective and using these findings for decision-making regarding the design, implementation, analysis, and measurement of current and future interventions.

Transparency

MCC is committed to releasing publicly as much information as feasible regarding the monitoring and evaluation of its programs. MCC’s website is routinely updated with the most recent monitoring information. Partner country entities, referred to as Accountable Entities, are encouraged to do the same on their respective websites. For each evaluation, all associated results, reports, data collection materials, de-identified data (to the extent practicable), and lessons learned are posted.

POLICY

Overview of Results at MCC11

This section describes MCC’s results framework, explaining how the various parts of project design are later used for M&E and describing the project characteristics required to conduct high quality monitoring and evaluation consistent with MCC’s evidence-based model. If a project’s design does not have these characteristics at the time of the investment decision and MCC proceeds with the investment, the quality of M&E will be reduced. This section enables country teams to identify any necessary project characteristics that are missing during the design phase, ensuring that the investment decision is made with full awareness of how the adequacy of project design affects the feasibility of conducting high quality M&E.

Every MCC project is evaluated; therefore, MCC M&E is structured at the project level. A compact or threshold program may consist of multiple projects.

MCC’s Project Results Framework

(Terms in bold are defined in Section 4, Key Definitions.)

The Project Objective, as stated in Section 1.2 of the program agreement, is the focal point for a project’s results framework. It is the project’s definition of success. MCC and partner countries define one or more outcome indicators in the M&E Plan to measure the Project Objective and, with it, success.

The project logic exists first as a theory held by the project designers.12 It is their cause-and-effect logic of how the expenditure of funds will achieve a project’s objective. It may change over time, as the context of the project changes or is better understood. This logic is then documented in the project description and an associated diagram. This diagram is the basis of all MCC M&E. Each targeted result of a project is represented as a box in the diagram: the expenditure of project funds produces outputs, which then lead to outcomes and eventually the Project Objective.

Where required, a cost benefit analysis (CBA) is conducted. The project’s CBA uses the same fundamental project logic. It also includes assumptions on results’ expected timeframes and magnitudes.

For each result in the project logic, an indicator is developed to measure it and documented in the M&E Plan. For each results indicator, a baseline and a target are documented.13 Where a result in the project logic is also included in the CBA, the definition of the associated indicator and its baseline and target should be drawn from the CBA that informs the investment decision. Indicator definitions, baselines, and targets for results not included in a CBA are drawn from the studies that inform project design.

Each MCC project is independently evaluated. The focus of the evaluation is to determine if the project achieved the Project Objective, and why or why not. MCC’s core evaluation questions, tailored to the project, set the scope of the project evaluation. Each question links to specified results in the project logic. The indicators tied to these results will answer the evaluation question.

Figure 1: Summary of the MCC Results Framework

Project Objective

The Project Objective is the definition of success envisioned and agreed upon by the project designers. It is found in Section 1.2 of the program agreement.

To conduct high quality monitoring and evaluation, the Project Objective should ideally have the following characteristics:

- is the inverse of the core problem identified by the project designers

- can be logically attributed to the proposed set of interventions based on evidence and literature

- is clearly specified, with one indicator to comprehensively measure it; if one indicator cannot comprehensively measure the statement, it is specified with as few indicators as possible

- has indicator(s) with a baseline and target, demonstrating that it can be represented in measurable terms, disaggregated for subpopulations targeted by the project14

- has indicator(s) agreed on by all project designers

- is achievable within a reasonable period following completion of the program

- can be measured cost-effectively through an independent evaluation

- is aligned with the benefit streams modeled in the CBA, such that each indicator used to measure it is the same indicator representing benefits in the CBA and the timeline for achievement matches the CBA model; this does not apply to results that are not modelled in the CBA

Project Logic

The project logic demonstrates how the expenditure of funding will achieve the Project Objective.

To conduct high quality monitoring and evaluation, the project logic diagram should ideally have the following characteristics:

- a statement of the problem the project is designed to improve

- a results chain with sufficient detail15 about the mechanism through which the Project Objective will be achieved, i.e., how planned outputs will lead to desired outcomes and ultimately result in the Project Objective

- a plausible theory based on existing evidence and literature

- key risks/assumptions to achieving results

- a timeline for achievement of the Project Objective, which matches the timeline in the CBA, if one exists.

- all results chains lead to the Project Objective

- the diagram stops at the Project Objective

- matches the fundamental logic of the CBA, if one exists

- agreed on by all project designers

- each result stated separately in the diagram

- only one project logic diagram (for M&E purposes) for each project

- clearly marked in the diagram:

  - the result that reflects the Project Objective, stated verbatim from the program agreement

  - results linked to the CBA, if one exists

  - whom or what each result is intended for

  - the relevant project/activity/sub-activity nomenclature as written in the program agreement

- arrows showing the causal chain leading to the Project Objective
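Two of the characteristics above can be checked mechanically if the diagram is stored as a directed graph: all results chains lead to the Project Objective, and the diagram stops there. The Python sketch below is illustrative only. It assumes a hypothetical encoding in which each result is a string and the arrows are a mapping from each result to the results it is expected to cause. MCC does not prescribe any such format, and the function name `check_project_logic` is invented for this example.

```python
from collections import defaultdict


def check_project_logic(arrows: dict[str, list[str]], objective: str) -> list[str]:
    """Return problems found in a project logic diagram; [] means both checks pass.

    `arrows` (hypothetical encoding) maps each result to the results it is expected to cause.
    """
    problems = []
    # Check 1: the diagram stops at the Project Objective, so no arrows leave it.
    if arrows.get(objective):
        problems.append(f"'{objective}' has outgoing arrows; the diagram should stop there")
    # Collect every result named anywhere in the diagram.
    results = set(arrows)
    for caused in arrows.values():
        results.update(caused)
    # Check 2: walk the arrows backward from the objective to find results that lead to it.
    causes = defaultdict(list)
    for result, caused in arrows.items():
        for effect in caused:
            causes[effect].append(result)
    leads_to_objective = {objective}
    stack = [objective]
    while stack:
        for cause in causes[stack.pop()]:
            if cause not in leads_to_objective:
                leads_to_objective.add(cause)
                stack.append(cause)
    for result in sorted(results - leads_to_objective):
        problems.append(f"'{result}' does not lead to the Project Objective")
    return problems


# Hypothetical teacher training logic with one result that is not linked to anything.
logic = {
    "Teachers trained": ["Improved teaching practices"],
    "Improved teaching practices": ["Higher student learning"],
    "Textbooks delivered": [],
}
print(check_project_logic(logic, "Higher student learning"))
# ["'Textbooks delivered' does not lead to the Project Objective"]
```

A backward walk from the objective is used because it finds, in one pass, every result that has some path to the Project Objective. Anything the walk does not reach is a dead-end chain.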

Indicators

An indicator is developed to track each result in the project logic. The Accountable Entity/MCC M&E team uses these indicators to track the validity of the theory of change. MCC M&E distinguishes between four indicator levels: outcome, output, process, and risk/assumption. “Results indicators” refers to both output and outcome indicators.

To conduct high quality monitoring and evaluation, results indicators should ideally have the following characteristics:

- Adequate: Each result in the project logic has one indicator to comprehensively measure it. If one indicator cannot comprehensively measure the result, as few indicators as possible should be specified.

- Direct: An indicator should measure as closely as possible the result it is intended to measure.

- Unambiguous: The definition of an indicator should be operationally precise, such that it can be consistently measured across time and by different data collectors. There should be no ambiguity about what is being measured, how to calculate it, what/who the sample is, or how to interpret the results.

- Practical: Data for an indicator should be realistically obtainable in a timely way and at a reasonable cost.

- Useful: Indicators selected for inclusion in the M&E Plan, including common indicators where appropriate, must be useful for management and oversight of the program.

- Specific: Who or what the data will be collected on is explicit and matches the CBA (if applicable). For example, which roads, which pipes, which households, or which people are expected to benefit.

- Aligned with CBA: There is a matching definition between the indicator and the CBA for results that are common to both the CBA and the project logic.

- Measurable: There is a baseline value (unless inappropriate to establish due to the structure of the metric, such as a date indicator).

- Targeted: There is a final target value, in all cases, and yearly target values, where feasible and appropriate, for each year after the associated intervention is expected to begin yielding measurable results.

- Appropriately disaggregated: Disaggregations and their inputs are specified, if necessary and relevant to the project logic, with associated baselines and targets.
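The following is a sketch of how an M&E team might record a results indicator so that gaps against the characteristics above are easy to spot. These are a baseline unless it is a date indicator, and a final target in all cases. The `Indicator` class, its fields, and the helper `rate_from_inputs` are hypothetical and not part of any MCC system. The helper illustrates the definition of indicator inputs as the numerator and denominator of a rate.

```python
from dataclasses import dataclass
from typing import Optional

RESULTS_LEVELS = ("outcome", "output")


@dataclass
class Indicator:
    """Hypothetical record for one M&E Plan indicator (illustrative fields only)."""
    name: str
    level: str                         # "outcome", "output", "process", or "risk/assumption"
    unit: str
    baseline: Optional[float] = None
    final_target: Optional[float] = None
    is_date_indicator: bool = False    # date indicators need no baseline

    def gaps(self) -> list[str]:
        """List missing elements for a results indicator; other levels return []."""
        if self.level not in RESULTS_LEVELS:
            return []                  # the checklist above applies to results indicators only
        issues = []
        if self.baseline is None and not self.is_date_indicator:
            issues.append("missing baseline")
        if self.final_target is None:
            issues.append("missing final target")
        return issues


def rate_from_inputs(numerator: float, denominator: float) -> float:
    """Percentage indicator computed from its two indicator inputs."""
    if denominator == 0:
        raise ValueError("denominator is zero; the rate is undefined")
    return 100.0 * numerator / denominator


# Hypothetical example: a baseline enrollment rate built from its inputs, target not yet set.
enrollment = Indicator(
    name="Secondary school enrollment rate",
    level="outcome",
    unit="percent",
    baseline=rate_from_inputs(4_200, 10_000),
)
print(enrollment.baseline)   # 42.0
print(enrollment.gaps())     # ['missing final target']
```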

Baselines

Baselines serve a critical purpose in project design. They are evidence that the problem to be solved by the intervention exists and show the magnitude of that problem. Without this evidence, it is not clear that there is a problem to be solved and that an intervention is necessary. Moreover, if the problem has not been sufficiently identified, it is not possible to show success.

Baselines should be captured as close as possible to but before the time an intervention is expected to yield measurable results. For this reason, if a long time passes between intervention design and treatment, an updated baseline may be required for monitoring and evaluation purposes.

Intervention design decisions may need to be based on data with known quality or availability concerns because it is the best information available at the time. The baseline may be updated if better data becomes available.

To conduct high quality monitoring and evaluation, baselines should ideally have the following characteristics:

- established during project design

- updated, if necessary, prior to when treatment begins (as close as feasible to this point)

- sourced from the studies that inform project design or administrative data provided by the partner country, or established as zero or non-existent by the indicator definition (e.g., date indicators)

Targets

A target is an expected value for a particular indicator at a particular time. For learning and accountability to be possible, it is important to document targets ex-ante and then compare them to what is achieved. At MCC, targets are set primarily in the CBA that informs the investment decision and in project design documentation.

Targets are not required for indicators other than results indicators, such as indicators that monitor a risk or assumption, though they may still be helpful. Additionally, targets are not necessary for program years before the intervention measured by the indicator is expected to begin.

To conduct high quality monitoring and evaluation, targets should ideally have the following characteristics:

- timing: be at least as long as the required exposure period

  - this will likely fall post-program for many outcome indicators

  - timing must be drawn from the CBA that informs the investment decision, if one exists

- value: match expected magnitude of the indicator at that time in:

  - the CBA that informs the investment decision, if applicable

  - project design documentation, if the indicator is not modelled in the CBA

- established before the intervention begins
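The timing and comparison expectations for targets reduce to simple arithmetic. The sketch below assumes annual resolution and a single treatment start year, and both function names are hypothetical. The progress measure expresses the change achieved as a share of the change targeted from baseline. That is one common convention and an assumption here; MCC's Indicator Tracking Table Guidance may define progress differently.

```python
def target_timing_ok(treatment_start_year: int, exposure_years: int,
                     target_year: int) -> bool:
    """True if the target year leaves at least the full exposure period after treatment begins."""
    return target_year >= treatment_start_year + exposure_years


def share_of_targeted_change(baseline: float, target: float, actual: float) -> float:
    """Change achieved so far as a fraction of the change targeted from baseline.

    Works for indicators targeted to rise or to fall: when progress is in the
    intended direction, numerator and denominator share the same sign.
    """
    if target == baseline:
        raise ValueError("target equals baseline, so no change is targeted")
    return (actual - baseline) / (target - baseline)


# Hypothetical example: grid energized in 2025 with a 3-year exposure period.
print(target_timing_ok(2025, 3, 2027))   # False: target falls before exposure completes
print(target_timing_ok(2025, 3, 2028))   # True
# Hypothetical indicator expected to fall (average travel time, in minutes).
print(share_of_targeted_change(baseline=30, target=20, actual=25))   # 0.5
```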

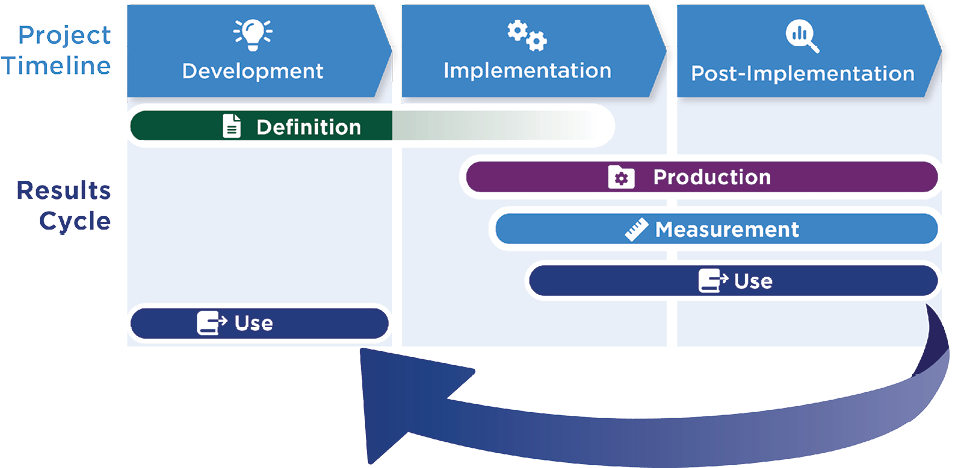

MCC’s Results Lifecycle

The MCC Results Framework is implemented through a cycle with four distinct phases: Definition, Production, Measurement and Use. These phases are described in detail below.

Definition

The definition of results occurs during project design. It is the articulation and documentation of ex-ante expectations: precisely what the project expects to achieve described in measurable terms, how it will achieve it, when it will achieve it, and the magnitude of change it will achieve.

The MCC project lead and the team economist, together with their partner country counterparts, articulate their definition of project success, in the form of the Project Objective statement. They then articulate the project logic explaining their rationale for the investment, with the support and agreement of relevant members of the Country Team, as well as partner country stakeholders.

Where required, the team’s economist conducts a CBA that aligns with this project logic to estimate the proposed project’s economic rate of return (ERR). M&E works with the team designing the program, especially the MCC project lead and the economist, and their partner country counterparts to define indicators associated with each result in the project logic to accurately capture what the project intends to achieve.
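The ERR is conventionally computed as the internal rate of return of a project's stream of economic net benefits. That is the discount rate at which their net present value equals zero. The following is a minimal Python sketch using bisection. It assumes annual net benefits listed from year 0, and a conventional stream (costs first, benefits later) so that NPV changes sign exactly once in the search interval. It illustrates the definition only and is not a substitute for the methods in MCC's Cost Benefit Analysis Guidelines.

```python
def npv(rate: float, net_benefits: list[float]) -> float:
    """Net present value of annual net benefits; index 0 is year 0 (undiscounted)."""
    return sum(nb / (1.0 + rate) ** year for year, nb in enumerate(net_benefits))


def err(net_benefits: list[float], lo: float = -0.5, hi: float = 5.0,
        tol: float = 1e-9) -> float:
    """Discount rate at which NPV is zero, found by bisection on [lo, hi].

    Assumes NPV changes sign exactly once on the interval, as it does for a
    conventional stream with costs first and benefits later.
    """
    f_lo = npv(lo, net_benefits)
    if f_lo * npv(hi, net_benefits) > 0:
        raise ValueError("NPV does not change sign on [lo, hi]; ERR is not bracketed")
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        f_mid = npv(mid, net_benefits)
        if f_lo * f_mid <= 0:      # root lies in [lo, mid]
            hi = mid
        else:                      # root lies in [mid, hi]
            lo, f_lo = mid, f_mid
    return (lo + hi) / 2.0


# Hypothetical stream: 100 of costs in year 0, then 20 of benefits in each of 10 years.
stream = [-100.0] + [20.0] * 10
print(round(err(stream), 3))   # 0.151
```

For this hypothetical stream, the ERR is roughly 15 percent. Bisection is used because it is simple and cannot diverge once the root is bracketed; a Newton-type solver would converge faster but needs a good starting guess.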

Production

Program implementation begins with the expenditure of funding after EIF and initiates the chain of events theorized in the project logic. MCC’s Country Team, the Accountable Entity, and Implementing Entities work together to use funding as planned and manage towards results. If implementation goes as planned, the expected outputs are produced. Either concurrent with or up to several years after the start of program implementation (depending on the intervention), treatment will begin. If the project logic holds, expected outcomes are then produced.

Measurement

This phase of the results lifecycle is M&E’s primary responsibility. Once program implementation and the chain reaction of results begin, it is time to test the project logic. M&E tracks the project’s progress along the project logic: actual results are measured by the indicator linked to each result, and progress is assessed by comparing actual results to targets. Indicator values are reported to the Accountable Entity management team by an Implementing Entity, by another institution, or directly by Accountable Entity staff. The Accountable Entity then reports the indicator values to MCC in the Indicator Tracking Table (ITT) as part of the Quarterly Disbursement Request Package (QDRP).

MCC M&E procures an independent evaluator at the appropriate time. Once hired, the independent evaluator produces an Evaluation Design Report that is reviewed by the Evaluation Management Committee, the Accountable Entity, and other partner country stakeholders. The timing of final data collection for the evaluation is based on when the Project Objective is expected to be meaningfully achieved, which is typically after the end of the program. Data collection uses a sample large enough to detect the expected magnitude of effect at that time, as documented in the associated indicator’s target. The independent evaluator uses these data to answer the evaluation questions in a final evaluation report. An interim report may also be produced at an earlier stage to measure interim results.
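The requirement that final data collection use a sample large enough to detect the expected effect can be illustrated with the textbook two-group sample-size formula for a difference in means. This is a simplified sketch only: it assumes two equal-sized groups, a known outcome standard deviation, and a two-sided test, and the function name `sample_size_per_arm` and its defaults (5 percent significance, 80 percent power) are hypothetical choices, not MCC requirements. Real evaluation designs (clustered samples, covariates, attrition) need the independent evaluator’s own power calculations.

```python
import math
from statistics import NormalDist

def sample_size_per_arm(effect: float, sd: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Observations needed in each of two equal-sized groups to detect a
    difference in means of `effect` with a two-sided test.

    Normal-approximation formula:
        n = 2 * (z_{1-alpha/2} + z_{power})^2 * sd^2 / effect^2
    Hypothetical simplification: ignores clustering, attrition, and
    covariate adjustment.
    """
    if effect == 0:
        raise ValueError("effect must be non-zero")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    n = 2 * (z_alpha + z_power) ** 2 * sd ** 2 / effect ** 2
    return math.ceil(n)  # round up to whole observations

# Illustrative expected effect of 0.2 standard deviations.
print(sample_size_per_arm(effect=0.2, sd=1.0))  # 393
```

Halving the expected effect roughly quadruples the required sample, which is why the magnitude documented in the indicator’s target matters for evaluation budgets.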

Use

The partner country and MCC review the data reported in the ITT quarterly and use it to flag any risks to achieving the project’s expected results.

Once an interim or final evaluation report is produced, MCC M&E packages the results in different formats to make this learning accessible to decision-makers at MCC, in partner countries, and in global development organizations. Relevant staff from MCC and the partner countries reflect on the findings and document lessons learned. Finally, the MCC M&E team leads various dissemination efforts, including presentations and compilations of evidence by sector. When developing new projects or making key decisions about current projects, MCC and its partner countries use evaluation findings to inform their decisions.
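The quarterly ITT review compares reported actuals against targets. As a minimal sketch, assuming progress is expressed as the share of the planned change from baseline to target achieved so far (one common convention, not necessarily the formula MCC’s ITT uses) and assuming a hypothetical 90 percent flagging threshold, the comparison might look like this. `IndicatorRecord`, `progress_to_target`, `flag_risks`, and the example indicators are illustrative names and values.

```python
from dataclasses import dataclass

@dataclass
class IndicatorRecord:
    """One indicator row, as it might be taken from an ITT (hypothetical structure)."""
    name: str
    baseline: float
    target: float   # annual target from the M&E Plan
    actual: float   # value reported for the period

def progress_to_target(rec: IndicatorRecord) -> float:
    """Share of the planned change (target - baseline) achieved so far.

    Works for indicators expected to rise or to fall, because the sign of
    the planned change cancels out.
    """
    planned_change = rec.target - rec.baseline
    if planned_change == 0:
        raise ValueError(f"{rec.name}: target equals baseline")
    return (rec.actual - rec.baseline) / planned_change

def flag_risks(records: list[IndicatorRecord], threshold: float = 0.9) -> list[str]:
    """Names of indicators whose progress is below the (hypothetical) threshold."""
    return [r.name for r in records if progress_to_target(r) < threshold]

records = [
    IndicatorRecord("Farmers trained", baseline=0, target=1000, actual=950),
    IndicatorRecord("Hectares irrigated", baseline=200, target=1200, actual=700),
]
print(flag_risks(records))  # ['Hectares irrigated']
```

A flag is only a prompt for discussion between the partner country and MCC; it does not by itself establish why an indicator is behind.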

Figure 2: Program Timeline and the Results Lifecycle

Timing for Individual Interventions

Projects are sometimes made up of separable interventions. Each separable intervention in a compact or threshold project moves through the results lifecycle individually. Interventions may begin the “Production” stage at different times, depending on when implementation starts and what the exposure period is.

While it is best practice to fully define interventions before deciding to invest in them, that is not always the case in practice. Some investments in a compact or threshold program may be at the “Production” stage while others remain at the “Definition” stage, even after program signing.

The timing of the “Measurement” and “Use” stages is dictated by the exposure period for the Project Objective, whose final measurement typically takes place a couple of years after the end of the program. Intermediate outcomes may enter Measurement before, but not after, the Project Objective. Use occurs as ITTs are submitted and again when the evaluation concludes.

Identifying Gaps in Project Design (and therefore the Results Framework)

If there are gaps in project design, the ability to conduct monitoring and evaluation that fulfills the principles of accountability and learning will be limited. Oftentimes, these gaps can be corrected once they are identified. To identify critical gaps in the results framework that might lead to less-than-optimal M&E, MCC can ask the following questions about its projects ahead of making an investment decision. These questions will be assessed through the Results Definition Assessment (RDA) at the time of the Investment Memo.16

- Does the Project Objective meet all the characteristics stated in Section 6.1.1.1 Project Objective above?

- Does the project logic meet all the characteristics stated in Section 6.1.1.2 Project Logic above?

- Does each result in the project logic have a defined indicator, with a baseline and target?

- Do these indicators, baselines and targets meet the characteristics stated in Sections 6.1.1.3 Indicators, 6.1.1.4 Baselines, and 6.1.1.5 Targets above?

This will help country teams and their partner country counterparts identify gaps in project design, prioritize amongst them, and communicate the M&E implications of any gaps that cannot be solved.
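The third RDA question (does each result have a defined indicator, with a baseline and target?) is mechanical enough to check programmatically. The sketch below assumes a hypothetical, simplified representation of the project logic as a list of results; `Result`, `find_definition_gaps`, and the example results are illustrative. It does not assess whether indicators meet the qualitative characteristics in Sections 6.1.1.3 to 6.1.1.5, which requires judgment.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Result:
    """A result in the project logic (hypothetical, simplified structure)."""
    statement: str
    indicator: Optional[str] = None
    baseline: Optional[float] = None
    target: Optional[float] = None

def find_definition_gaps(results: list[Result]) -> dict[str, list[str]]:
    """Map each result statement to the fields it is missing."""
    gaps = {}
    for r in results:
        missing = [f for f in ("indicator", "baseline", "target")
                   if getattr(r, f) is None]
        if missing:
            gaps[r.statement] = missing
    return gaps

logic = [
    Result("Farmers adopt improved practices", "Adoption rate (%)",
           baseline=12.0, target=40.0),
    Result("Household income increases", "Average household income"),
]
print(find_definition_gaps(logic))
# {'Household income increases': ['baseline', 'target']}
```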

Summary of Roles and Responsibilities in the Results Lifecycle

| Phase | MCC: M&E Lead | MCC: Economist | MCC: Project Lead(s) and Other Team Members, as appropriate | MCC: Evaluation Management Committee | Partner Country: Program Development Team/Accountable Entity/Post-Program Point of Contact | Partner Country: Implementing Entities | Evaluator: Independent Evaluator |
|---|---|---|---|---|---|---|---|
| Definition | Presents relevant evidence from MCC and other interventions to inform results definition. Shares the joint Definition responsibilities listed below the table. | Shares the joint Definition responsibilities listed below the table. | Shares the joint Definition responsibilities listed below the table. | Not applicable | Develops project concepts. Collaborates with MCC to articulate the Project Objective and project logic. Develops M&E Plan, including defining indicators and targets, collaboratively with MCC. Ensures M&E Plan is up to date. | Supports partner country program development team in development of project concepts, Project Objective, project logic, and M&E Plan. | Not applicable |
| Production | Not applicable | Not applicable | Manages towards results | Not applicable | Manages towards results | Manages towards results | Not applicable |
| Measurement | Procures and manages an independent evaluator. Supports Accountable Entity M&E. Strives to ensure quality of ITT reporting. | Participates as a member of the EMC. Reviews the ITT for accuracy. | Participates as a member of the EMC. Reviews the ITT for accuracy. | Reviews evaluation deliverables. | Reports indicators to MCC in the ITT. Reviews evaluation deliverables. | Reports indicators to Accountable Entity. Reviews evaluation deliverables. | Produces evaluation deliverables. |
| Use | Flags for the country team any risks to results found in ITT data. Packages results for learning and leads dissemination efforts to inform decisions. Uses evaluation findings to improve future evaluation design. | Flags for the country team any risks to the ERR found in ITT data. Uses evaluation findings to inform economic analysis. | Uses ITT data to inform decisions. Uses evaluation findings to inform decisions. | Reflects on evaluation findings and documents lessons. | Conducts same responsibilities as MCC counterparts. | Uses evaluation findings to inform decisions. | Conducts evaluation results dissemination events. |

Joint Definition responsibilities of the MCC M&E Lead, Economist, and Project Lead(s):

- Work collaboratively with each other and with the partner country to articulate the Project Objective and project logic. Primary responsibility belongs to the Project Lead.

- Work collaboratively with each other to conduct a CBA aligned with the project logic, where required. Primary responsibility belongs to the Economist.

- Work collaboratively with each other and the partner country to define indicators associated with the project logic for the M&E Plan, ensuring the precise definition captures what the Project Lead envisions and matches what is in the CBA. Primary responsibility belongs to the M&E Lead.

- Work collaboratively with each other and the partner country to ensure M&E Plan targets match the project design and the forecasts in the CBA. Primary responsibility belongs to the Project Lead, except that responsibility for the CBA belongs to the Economist.

Program Development

Once MCC’s Board of Directors selects a country as eligible to develop a compact or threshold program, the country can engage with MCC and initiate the development process. In developing a program with MCC, selected countries typically follow a process that includes five phases: (1) Preliminary Analysis, (2) Problem Diagnosis, (3) Project Definition, (4) Project Development, and (5) Negotiations. More information on program development and the five phases can be found in MCC’s Compact Development Guidance. Currently, there are three major milestones: Phases 1-3 culminate in the Investment Management Committee (IMC) review of the Concept Definition Memo, Phase 4 culminates in the IMC review of the Investment Memo, and Phase 5 culminates in the signing of the program agreement.

Throughout these phases, MCC strives to develop logical, evidence-based programs that are well documented and likely to deliver their intended benefits, that allow accurate assessment of the achievement of targeted results, and that provide useful learning. As explained in Section 6.1 Overview of Results at MCC, MCC’s ability to conduct high-quality M&E depends on the project design. MCC M&E staff participate in the program development process to help the team identify gaps in project design that can then be corrected, resulting in a stronger project that is more likely to achieve its Objective and facilitate high-quality M&E. MCC M&E’s specific role in each phase is discussed below.

Phase 1: Preliminary Analysis

During the Preliminary Analysis phase, MCC and the selected country designate teams to work together to identify the country’s most binding constraints to the growth of the economy and the reduction of poverty.

M&E staff are typically not involved during this phase.

Phase 2: Problem Diagnosis

This phase of development focuses on defining a core problem that a future project will aim to solve.

M&E staff join this phase to bring a results measurement lens to the project designers’ work, in support of a high-quality future M&E Plan. While there are no specific M&E deliverables in this phase, M&E staff should actively engage in team discussions to facilitate M&E work in subsequent stages of project development.

- M&E staff will participate in, but not lead, team discussions and workshops related to root cause analysis. M&E staff will advocate for an analysis based on evidence, bringing in learning from evaluations of past MCC projects as appropriate.

- M&E staff will participate as the project designers collaborate to draft a well-defined problem statement. M&E staff will advocate for a problem statement that is supported by data and that can be quantified, as it will be used to inform the Project Objective (the inverse of the problem).

- M&E staff will advocate for a Project Objective that meets the criteria set forth in Section 6.1.1.1 Project Objective, to facilitate high-quality M&E of each project.

- If a Project Objective lacks the characteristics necessary to facilitate high-quality M&E as documented in Section 6.1.1.1 Project Objective, this should be communicated to the country team and partner country, and documented by MCC M&E staff at management touchpoints and in any memorandum produced and submitted to the Investment Management Committee at this phase of development.

Phase 3: Project Definition17

This phase of development seeks to further define the targeted results of the proposed project (e.g., preliminary identification of the activities to be funded and the key targeted outcomes and refinement of the Project Objective).

M&E staff continue to apply a results measurement lens to the project designers’ work, in support of a high-quality future M&E Plan. Specific M&E staff responsibilities during this stage of development are described below.

- Based on the project lead and economist’s written description of the preliminary project logic, M&E staff will work with the project designers to produce a project logic diagram that will serve as the basis for the future M&E Plan. The written description of the logic put forth by the project lead and the economist should describe the results targeted by the current project design and the benefit streams expected to be modeled in the CBA (if one is required) such that it can be translated into a logic diagram.

- M&E staff will engage with the project designers to identify key indicators to measure targeted results, including relevant common indicators.

- M&E staff will conduct an initial quality review of the data sources required (see Section 6.5.3.1 Pre-Implementation DQR).

- M&E staff will ensure that tasks related to assessing data availability and quality are incorporated in due diligence contracts, feasibility studies, and other project design contracts.

- If the Project Objective or preliminary project logic lacks the characteristics necessary for high-quality M&E as documented in Section 6.1.1 Overview of Results at MCC, this should be communicated to the country team and partner country and documented by MCC M&E staff at management touchpoints and in any memorandum produced and submitted to the Investment Management Committee at this phase of development.

Phase 4: Project Development

Each project and its targeted results should be fully defined in this development phase.

MCC M&E Staff Role

M&E staff lead the development of the Program Monitoring and Evaluation Framework (as captured in the M&E annexes to the Investment Memo). Specific M&E responsibilities during this stage of development are described below.

- Once the project lead and the economist have a detailed written description of the project logic, M&E staff will work with the project designers to update the project logic diagram. The project logic diagram should be developed in line with the characteristics described in Section 6.1.1.2 Project Logic.

- M&E staff will collaborate with the project designers to ensure that the diagram reflects the project logic described in the project description and CBA (if one exists) sections of the Investment Memo. If these sections conflict, M&E staff will prioritize the project description and will document the lack of alignment in the annexes to the Investment Memo.

- M&E staff will work with the project lead, economist, and other project designers to identify an appropriate indicator to measure each output- and outcome-level result included in the project logic diagram and to draw baselines and targets from appropriately documented sources (e.g., feasibility studies, due diligence reports, CBA).

- The M&E annexes of the Investment Memo, when taken together, should contain the information required to meet the M&E Policy Results Definition Standard described below and facilitate high-quality M&E.

- If a lack of project clarity or definition prevents the development of a Program Monitoring and Evaluation Framework that meets the M&E Policy Results Definition Standard, this should be communicated to the project designers and documented by MCC M&E staff at management touchpoints and in the Investment Memo.

M&E Policy Results Definition Standard

The Program Monitoring & Evaluation Framework set forth in the Investment Memo must contain the following (the “M&E Policy Results Definition Standard”) to satisfy the M&E Policy and meet the MCC investment criterion of “Includes clear metrics for measuring results of projects.” This Standard is assessed as part of the Results Definition Assessment:

1. Indicators to comprehensively measure the Project Objective, with their definitions, baselines, and targets;

2. Outcome indicators that overlap between the CBA (if one exists) and project logic, with their definitions, baselines, and targets;

3. Output indicators currently identified as critical to achieving the Project Objective, with their definitions, baselines, and targets.

4. These indicators (items 1-3 above) should be based on a project logic diagram that contains the characteristics described in Section 6.1.1.2 Project Logic.

5. These indicators (items 1-3 above) and their components should follow the characteristics described in Section 6.1.1.1 Project Objective, Section 6.1.1.3 Indicators, Section 6.1.1.4 Baselines, and Section 6.1.1.5 Targets.

Phase 5: Negotiations

During the Negotiation phase, MCC drafts, negotiates, finalizes, and signs the program agreement with the selected partner country.

Based on the Investment Memo, M&E staff draft the Program M&E Framework for inclusion in the program agreement. If project design changes between the time the Investment Memo is presented to MCC management and the drafting of the program agreement, M&E staff ensure those changes are reflected in the Program M&E Framework. Substantive changes between the Investment Memo and the program agreement should be flagged to MCC M&E management.

All compact and threshold program agreements must include the Program M&E Framework, which provides a description of MCC and the partner country’s agreed preliminary approach for monitoring and evaluating the program. While modifications to this approach may be made in accordance with this policy during the development of the initial M&E Plan, the Program M&E Framework should provide as complete a picture as possible of the program’s results framework. Specifically, the Program M&E Framework in the program agreement must include:

- A project logic diagram that includes the results to be measured, has the characteristics described in Section 6.1.1.2 Project Logic, and clearly denotes the Project Objective;

- A summary of the CBA and estimated ERR (when available) that informs the definition of results;18

- The number of expected beneficiaries by project, to the extent applicable, defined in accordance with MCC’s Cost Benefit Analysis Guidelines;19

- Indicators to comprehensively measure the Project Objective, with their definitions, baselines, and targets;

- All additional outcome indicators that overlap between the CBA (if one exists) and project logic, with their definitions, baselines, and targets;

- All output indicators currently identified as critical to achieving the Project Objective, with their definitions, baselines, and targets;20

- General requirements for data collection, reporting, and data quality reviews;

- The specific requirements for evaluation of every project and a brief description of the proposed methods that will be used;

- Requirements for the implementation of the M&E Plan, including Accountable Entity responsibilities; and

- A timeline for any results that are expected after the end of the program, noting the commitment for both parties to continue the evaluation of results beyond the end of the program.

Guidance and standard language for the Program M&E Framework is contained in the internal Template for the Program M&E Framework.

M&E Role on Partner Country Teams in Program Development

To support the program development phases described above, partner country program development teams should include an Economist/M&E Specialist21 who works with MCC and other partner country project designers to support the development of projects that are clearly related to the constraints to economic growth, address the core problems underlying the constraint(s), have a clear and complete project logic and results framework that meets the requirements in this policy, and meet MCC’s requirements for economic and beneficiary analysis.

In addition to the M&E responsibilities described under the program development phases above, the Economist/M&E Specialist will work closely with other partner country project designers to assess the capacity of stakeholders to implement data collection and reporting, lead discussions on the prospective evaluation strategy, and develop an M&E budget. As the program moves towards implementation, the partner country Economist/M&E Specialist will prepare or train implementing entities on their expected M&E responsibilities under the program.

For additional guidance on the M&E role on partner country program development teams, see the internal standard Economist/M&E Specialist Position Description.

Program Implementation

This section describes MCC and Accountable Entity M&E staff responsibilities during program implementation, apart from those related to independent evaluation and data quality, which are addressed in Sections 6.4 Independent Evaluation and 6.5 Data Quality, respectively.

Implementation responsibilities are shared between MCC and the Accountable Entity M&E team. The Accountable Entity M&E team is largely responsible for monitoring program progress. The Accountable Entity M&E team is required to prepare the initial M&E Plan and update it on a regular basis, report regularly against the M&E Plan, verify the quality of indicator data, and manage the M&E budget. During implementation, the Accountable Entity M&E team is also responsible for supporting the independent evaluations by identifying users of the evaluation, building local ownership and commitment to the evaluation, overseeing the work of the data collection firm, and performing quality reviews of evaluation products.

The MCC M&E team is responsible for oversight of the independent evaluator, including quality control of evaluation deliverables, and providing high-level oversight of the Accountable Entity monitoring activities, including striving to ensure the quality of ITT reporting. The remainder of the MCC country team and Accountable Entity staff are responsible for supporting the development of the M&E Plan and quarterly ITT, and regularly reviewing independent evaluation deliverables and other M&E materials.

The Monitoring and Evaluation Plan

After a compact or threshold program agreement is signed, the Accountable Entity and MCC must finalize an M&E Plan that provides a detailed framework for monitoring and evaluating the program. The M&E Plan is a required document detailing a program’s approach to monitoring, evaluating, and assessing progress towards and achievement of Project Objectives. The M&E Plan is used in conjunction with other documents, such as work plans, procurement and grant plan packages, and financial plans, to provide oversight for program implementation and to strive to ensure the program is on track to achieve its intended results. The M&E Plan also serves as a communications tool, so that Accountable Entity staff and other stakeholders clearly understand the results the Accountable Entity is responsible for achieving.

M&E Plan Contents

There are four primary components of each M&E Plan:

- The Program and Project Objective Overview presents the program description, including the results that need to be measured under the M&E Plan. At a minimum, it includes:

  - Each Project Objective.

  - A clear logic diagram for each project.

  - A summary of all CBAs (if applicable), which present the economic rationale for MCC investments, and of the beneficiary analysis, which estimates the distribution of program benefits.

- The Monitoring Component of the M&E Plan identifies the monitoring and data quality assessment strategies and documents the reporting plan to monitor progress against targets during program implementation. This component consists of:

  - A summary of the strategy for monitoring program implementation and a description of standard requirements for reporting progress against targets to MCC, the Accountable Entity, and local stakeholders. The monitoring strategy will also include a summary of the plan for assessing data quality.

  - Plans to fill data gaps, if applicable. If MCC, its partner countries, or a third party identifies gaps in data availability or data quality that limit the ability to measure results, best efforts to fill these gaps should be covered in the planning of monitoring activities and their associated costs for the program implementation period and beyond. These efforts may be documented in the M&E Plan.

- The Evaluation Component identifies evaluations that will be conducted and presents the plan for each. For each project, this section must include the proposed evaluation type (impact, performance, or multiple), evaluation questions, methodology, planned data sources, and proposed timelines for data collection and analysis. The independent evaluation plan can be developed in stages for each project as it is designed and implemented.

- The Indicator Annexes document all indicators used to assess program progress, including their baselines, targets, and data sources, as well as changes to indicators over time. They are presented in three annexes: Annex I: “Indicator Documentation Table” (composed of the process, output, outcome, and risk/assumption indicators that track the project logic), Annex II: “Table of Indicator Baselines and Targets,” and Annex III: “Modifications to the M&E Plan.”

  - Annexes I and II document the program’s plan for regular data collection, oversight, and reporting during program implementation and the evaluation period, including the indicators, their definitions, the planned data source, the responsible party for each indicator, reporting frequency, and baseline and target values. These annexes must include indicators that are required to be reported to MCC on a regular basis (i.e., those in the ITT) and all indicators that are expected to be measured through an independent evaluation.

  - Annex III documents major modifications to indicators and the project logic during the program’s term.

- A description of the roles and responsibilities for the implementation and management of M&E activities. This section describes the role of the Accountable Entity M&E staff as well as post-program responsibilities of the partner country. As evaluation does not end when the program’s term ends, the M&E Plan must delineate post-program responsibilities. In general, MCC continues to be responsible for managing the independent evaluator and the partner country continues to facilitate evaluation work, including providing input into evaluation deliverables. Both MCC and the partner country are responsible for disseminating evaluation results and using them for future project design.

- The full budget for all planned M&E activities in the program (including MCC-contracted independent evaluations), identifying which of those activities will be funded by the program agreement and which will be funded directly by MCC.

Indicators in the M&E Plan

All M&E indicators must be documented in Annexes I and II of the M&E Plan. Annex III will document any changes to M&E indicators or project logic diagrams over time. Annex I will distinguish between indicators that are expected to be reported on regularly during the life of the program and indicators that will only be measured during distinct periods as part of an independent evaluation.

As mentioned above in Section 6.2.4 Project Development, the project logic diagram for each project in the M&E Plan should meet the characteristics found in Section 6.1.1.2 Project Logic. An indicator should be mapped to each result in the diagrams. If there is not yet enough clarity to define an indicator for a result in the project logic, a placeholder is required. If it does not and will not make sense to define an indicator for a result, that must also be documented. The indicators should, to the extent feasible, follow the indicator characteristics described in Section 6.1.1.1 Project Objective, Section 6.1.1.3 Indicators, Section 6.1.1.4 Baselines, and Section 6.1.1.5 Targets.

Outcome indicators that are not part of the results pathway leading to the Project Objective should not be included in the M&E Plan (i.e., each outcome indicator should map to a result in the project logic that leads to the Project Objective). Key outputs, even if outside the project logic, may be tracked. Any risk/assumption indicators that are included in the annexes must correspond to a critical risk or assumption in the project logic. A small number of process indicators may also be included in the annexes. All relevant common indicators should be included, including common indicator inputs and disaggregations, if data are expected to be available (see Section 6.3.3.2 Common Indicators).

M&E indicators must be kept to the minimum necessary, consistent with the adequacy principle defined in Section 6.1.1.3 Indicators. Accountable Entities must not supplement the M&E Plan with indicators that are unlinked to the project logic, that overburden the Accountable Entity’s M&E staff, that are too costly or complex to monitor in light of M&E resources, or that are not used by the relevant MCC or Accountable Entity staff.

It is expected that any outcome indicators planned for inclusion in the ITT will be sourced from administrative data or implementers’ reports. Any primary data collection on outcome indicators will instead be planned and managed within the context of the independent evaluation.

The Accountable Entity may set quarterly targets for internal management purposes, but MCC only utilizes annual targets for reporting in the ITT.

Indicator Disaggregations and Inputs

The M&E Plan will specify which indicators are disaggregated by distinct subsets, such as sex or age. Baselines and targets are not usually required for disaggregations; however, when progress on a disaggregation is critical to a program’s success as described in the project logic (e.g., results for women in an intervention focused on women), targets may be required. Additionally, where the CBA directly and explicitly links performance to disaggregation-specific outcomes, targets for the disaggregated indicators will likewise be required and established.

Some indicators are composites of multiple inputs, such as percentages, ratios, and averages. For transparency and to ensure accuracy in reporting, indicator inputs for composite indicators must be included in the M&E Plan and ITT whenever feasible. Where data for inputs are not available, this requirement may be waived with the approval of the MCC M&E lead on the Country Team. An explanation of why input data cannot be provided must be documented in the M&E Plan.
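Reporting inputs alongside composite indicators matters most when values are combined across disaggregations: a percentage for the whole population must be recomputed from the summed inputs, not averaged across subgroups. The sketch below uses hypothetical inputs for a percentage indicator disaggregated by sex to show the difference; the `percentage` helper and the numbers are illustrative only.

```python
def percentage(numerator: int, denominator: int) -> float:
    """Composite indicator value computed from its two inputs."""
    if denominator == 0:
        raise ValueError("denominator input must be non-zero")
    return 100 * numerator / denominator

# Hypothetical inputs for a percentage indicator, disaggregated by sex.
inputs = {
    "female": {"numerator": 30, "denominator": 50},
    "male": {"numerator": 45, "denominator": 150},
}

by_sex = {k: percentage(v["numerator"], v["denominator"]) for k, v in inputs.items()}

# Correct total: recompute from the summed inputs.
total = percentage(
    sum(v["numerator"] for v in inputs.values()),
    sum(v["denominator"] for v in inputs.values()),
)

# Misleading total: unweighted average of the disaggregated percentages.
naive = sum(by_sex.values()) / len(by_sex)

print(by_sex)  # {'female': 60.0, 'male': 30.0}
print(total)   # 37.5
print(naive)   # 45.0
```

Without the numerator and denominator inputs, a reviewer could not tell which of the two totals had been reported.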

Common Indicators

MCC uses common indicators to consistently measure progress across programs in key sectors and report those results to internal and external stakeholders. The Guidance on Common Indicators defines common indicators for each sector, including the indicators’ definitions, levels (i.e., outcome, output, process, or risk/assumption), units, standard disaggregations and inputs, and additional information.

Each Accountable Entity must include common indicators in its M&E Plan when the indicators are relevant to that program’s activities and when collecting data would not be too costly or infeasible. The MCC M&E lead assigned to the program will specify which indicators are considered relevant. When including a common indicator in the M&E Plan, the Accountable Entity must include the indicator name, definition, and other information exactly as it appears in the guidance. Disaggregated data and indicator inputs of common indicators should also be reported to MCC when relevant, feasible, and as specified in the guidance.

Developing the Initial M&E Plan

A program’s initial M&E Plan is developed based on the Program M&E Framework from the compact or threshold program agreement, updated to incorporate additional details newly available after the agreement signing. Specific timing for finalizing the initial M&E Plan, which is usually no later than 180 days after a program’s entry into force, is set forth in the program agreement. The Accountable Entity Board of Directors (or appropriate partner country representative) must be in place before the initial M&E Plan can be finalized.

Responsibility for developing the M&E Plan lies with the Accountable Entity M&E Director, with support and input from MCC’s M&E team and economist, with the exception of the Evaluation section and the Economic and Beneficiary Analyses sections of the Program and Project Objective Overview, which are the responsibility of the MCC M&E Lead and economist, respectively.
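The usual deadline for the initial M&E Plan is a simple date offset from entry into force. A minimal sketch, assuming the 180-day figure is counted in calendar days (the binding period and counting rule are whatever the program agreement sets) and using a hypothetical EIF date; `initial_me_plan_deadline` is an illustrative name.

```python
from datetime import date, timedelta

def initial_me_plan_deadline(eif: date, days_after_eif: int = 180) -> date:
    """Latest date for finalizing the initial M&E Plan, counted in calendar
    days from entry into force. The default of 180 reflects the usual
    period; the program agreement governs the actual timing."""
    return eif + timedelta(days=days_after_eif)

print(initial_me_plan_deadline(date(2024, 1, 15)))  # 2024-07-13
```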

Senior leaders of the Accountable Entity, partner country stakeholders, the MCC Resident Country Mission (RCM), and other relevant staff within MCC and the Accountable Entity must assist with the development of the M&E Plan. MCC and Accountable Entity staff are expected to guide indicator selection for results that do not yet have an associated indicator, the refinement of indicator information, and review of targets.

The initial M&E Plan must receive MCC No Objection and be approved by the Accountable Entity Board of Directors (or appropriate partner country representative).22

If changes are needed between the Program M&E Framework and the initial M&E Plan, they must be documented, when appropriate, in accordance with Section 6.3.6 Documenting Major Modifications. Additional details on the requirements for drafting and approving M&E Plans can be found in MCC’s M&E Plan Guidance.

M&E Plan Peer Review

The M&E Plan will undergo a peer review within MCC before the beginning of the formal approval process. More details on the peer review process can be found in the M&E Plan Guidance.

Revising the M&E Plan

Once finalized, the M&E Plan may be reviewed and revised at any time, as determined by the Accountable Entity and the MCC M&E team. Motivations for a revision include keeping the plan up to date and consistent with design documents and project work plans, and incorporating lessons learned to improve performance monitoring and measurement. The M&E Plan must be kept as current as possible, with revisions conducted as needed and feasible. At a minimum, one comprehensive review of the M&E Plan, with related updates and revisions, must take place during the life of the program.

Any such revision must be consistent with the requirements of the compact or threshold program agreement and any relevant supplemental agreements. The M&E Plan may be modified without amending the Program M&E Framework or the program agreement. However, the M&E Plan must be reviewed, and amended if appropriate, after MCC approves a modification to the program’s scope in accordance with its Policy on the Approval of Modifications to MCC Compact Programs. MCC may withhold disbursement of program funding if the M&E Plan is not kept up to date.

Major and Minor M&E Plan Revisions

MCC M&E distinguishes between major and minor modifications to the M&E Plan, and between major and minor M&E Plan revisions. Major modifications are limited to changes to project logics, baselines, targets, and indicator definitions; the addition of new indicators; and the retirement of existing indicators. All other modifications are considered minor.

A major M&E Plan revision is a revision that includes one or more major modifications. A minor M&E Plan revision includes only minor modifications.
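For illustration only, the classification rule above can be expressed as a short Python sketch. The enumeration labels are hypothetical names chosen for this example rather than official MCC terms, and the sketch is not part of MCC’s review or approval process. It shows that a single major modification is enough to make the whole revision major.

```python
from enum import Enum


class Modification(Enum):
    # Hypothetical labels for modification types; not official MCC identifiers.
    PROJECT_LOGIC = "project logic change"
    BASELINE = "baseline change"
    TARGET = "target change"
    DEFINITION = "indicator definition change"
    NEW_INDICATOR = "new indicator"
    RETIRED_INDICATOR = "retired indicator"
    OTHER = "other change"


# Per the policy text, only these modification types count as major.
MAJOR_MODIFICATIONS = {
    Modification.PROJECT_LOGIC,
    Modification.BASELINE,
    Modification.TARGET,
    Modification.DEFINITION,
    Modification.NEW_INDICATOR,
    Modification.RETIRED_INDICATOR,
}


def classify_revision(modifications):
    """Return "major" if any modification is major, otherwise "minor"."""
    if any(m in MAJOR_MODIFICATIONS for m in modifications):
        return "major"
    return "minor"


print(classify_revision([Modification.OTHER]))                       # minor
print(classify_revision([Modification.OTHER, Modification.TARGET]))  # major
```

Under this reading, a major revision would then follow the same approval process as an initial M&E Plan.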

All major M&E Plan revisions must follow the same approval process as an initial M&E Plan. Additional details on the review and approval processes for major and minor M&E Plan revisions are summarized in the M&E Plan Guidance.

Major Modifications

Major modifications may be made to M&E indicators or to a project logic diagram. These major modifications, together with a justification for each change (for changes to indicators only), must be documented in Annex III of the M&E Plan. Annex III summarizes all major modifications made between program signing and the current version of the M&E Plan. Minor modifications do not need to be tracked in Annex III. Major modifications may be made to an M&E Plan only for the specific reasons summarized below. Additional context on when each justification may be applied can be found in the M&E Plan Guidance.

Major Modification Justifications

Major modifications in the M&E Plan include:

- A new indicator may be created.

- An existing indicator may be retired.

- An indicator baseline, target, or definition may be updated.

- The project logic may be changed.

Creating Indicators

An indicator may only be created for one of the following reasons:

- Change to the project as a result of an official program modification that results in a new indicator being relevant.23

- Existing indicators do not sufficiently measure project logic.

- MCC requires that a new common indicator be used for measurement across all projects of a certain type.

Retiring Indicators

An indicator may only be retired for the following reasons:

- Irrelevant due to change in the project as a result of an official program modification.

- Irrelevant to the project logic.

- Cost of data collection for indicator outweighs usefulness.

- Indicator quality is found to be poorer than was thought when the indicator was included in the plan.

Modifying Baselines

Baselines may only be modified under the following circumstances:

- Change to the project as a result of an official program modification that results in a new baseline being relevant.

- More accurate information emerges.

- Corrections to erroneous data.

- TBD replaced with baseline.

- TBD replaced with no baseline.

Modifying Targets

Targets for process indicators may be modified when the implementation plan is updated; however, modifications to all targets should be kept to a minimum.

Targets for outcome and output indicators may only be modified as follows:

- For indicators that are not linked to the CBA, targets may only be modified under the following circumstances:

  - An official program modification requiring the approval of a vice president, the CEO, or the MCC Board under the Policy on the Approval of Modifications to MCC Compact Programs.24

  - Baseline change.

  - Occurrence of exogenous factors.

  - Corrections to erroneous data.

  - TBD replaced with target.

  - TBD replaced with no target.

  - Outcomes only: preserve the exposure period.

- Targets for indicators linked to the CBA may only be modified for the following reason:

  - Change to align with a CBA update. A “CBA update” includes only: (1) updates to the CBA made between the submission of the Investment Memo to the Investment Management Committee and 90 days after the program’s EIF date; or (2) updates made more than 90 days after the program’s EIF date that are part of an official program modification requiring the approval of a vice president, the CEO, or the MCC Board under the Policy on the Approval of Modifications to MCC Compact Programs.
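The two-part “CBA update” test depends on dates measured relative to EIF. The following Python sketch illustrates one reading of it. The function and parameter names are hypothetical. The sketch assumes the first window includes both the Investment Memo submission date and the 90th day after EIF. It is not an official MCC tool.

```python
from datetime import date, timedelta


def is_cba_update(update_date: date,
                  investment_memo_date: date,
                  eif_date: date,
                  part_of_approved_modification: bool) -> bool:
    """Hypothetical check of whether a CBA revision counts as a "CBA update".

    part_of_approved_modification should be True when the revision is part of
    an official program modification requiring approval by a vice president,
    the CEO, or the MCC Board.
    """
    window_end = eif_date + timedelta(days=90)

    # Case 1 (assumed inclusive): from Investment Memo submission to EIF + 90 days.
    if investment_memo_date <= update_date <= window_end:
        return True

    # Case 2: more than 90 days after EIF, only with an approved modification.
    if update_date > window_end:
        return part_of_approved_modification

    # Revisions made before the Investment Memo was submitted do not qualify.
    return False


eif = date(2024, 1, 1)
memo = date(2023, 6, 1)
print(is_cba_update(date(2024, 2, 15), memo, eif, False))  # True
print(is_cba_update(date(2024, 6, 1), memo, eif, False))   # False
print(is_cba_update(date(2024, 6, 1), memo, eif, True))    # True
```

Under this reading, the target of an indicator linked to the CBA could be modified only when such a check holds and the new target aligns with the updated CBA.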

Modifying a Definition

Indicator definitions may only be modified in the following circumstances:

- Additional clarity is required to accurately measure or interpret an indicator.

- MCC requires a new common indicator definition.

- Correction of spelling or formatting errors.

Modifying the Project Logic Diagram

Project logic diagrams may be modified when new context or learning requires a change in results or linkages. Specific justifications for changes to project logic diagrams are not required; however, all historical versions of the diagrams must be included in Annex III to the M&E Plan so that changes to the diagrams can be tracked over time. The project logic should be reviewed when a program modification is made.

Modifying the Independent Evaluation Design

Changes to components of the MCC Results Framework resulting in modifications to the project logic and/or indicators may necessitate adjustments to the measurement plans of independent evaluations that are already underway. These changes should be made according to the Evaluation Management Guidance, but are not required to be documented in Annex III to the M&E Plan.

Reporting Performance Against the M&E Plan

The ITT is a reporting tool that serves as the primary mechanism for monitoring the progress of a compact or threshold program. Following approval of the initial M&E Plan, Accountable Entities must submit the ITT to MCC on a quarterly basis as part of the QDRP for compact or threshold program funds. No changes to indicators, baselines, or targets may be made in the ITT until the changes have been approved in the M&E Plan.

All indicators from the Indicator Annexes of the most recently approved M&E Plan that the Accountable Entity is expected to report are included in the ITT and should be reported at the frequency specified in that plan (typically quarterly, semi-annually, or annually). As part of each compact or threshold program QDRP submission, the Accountable Entity, with oversight from the MCC M&E team, is responsible for updating data for the current and previous quarters, reporting progress against targets, verifying that all historical data are accurate, and providing source documentation and supplementary information for each newly reported or updated actual in the ITT. Additionally, indicators identified in the M&E Plan as disaggregated by sex, age, or another disaggregation type should be reported on a disaggregated basis in the ITT.

Indicators that are not reported regularly through the ITT are reported as part of evaluation reports.

Additional guidance on reporting is contained in MCC’s Guidance to Accountable Entities on the Quarterly Disbursement Request Package and the Indicator Tracking Table Guidance.

Closeout ITT

The Closeout ITT is the final ITT submitted by the Accountable Entity and is considered the official source for monitoring results after a program ends. The Closeout ITT provides a quarterly snapshot of progress made and outputs completed for ITT indicators between EIF and the program end date. It should not include progress attained for any indicator after the program end date. All closeout reports produced by MCC or the Accountable Entity that report on program outputs specified in the M&E Plan must use the Closeout ITT as the data source.

Dissemination and Use of ITT Data

Monitoring results from the ITT are used by Accountable Entities and MCC to track implementation progress, inform decision-making, and support reporting. Indicator actuals and progress should be reviewed regularly by the Accountable Entity, its Board of Directors (or appropriate partner country representative), the MCC country team, and MCC management to determine the extent to which the program is on track to achieve its intended results. ITT data should be considered in project management decisions and are included in documentation reviewed by MCC management during periodic portfolio reviews. When significant delays or failures to achieve targets are apparent in the ITT, appropriate mitigation measures should be considered to redirect the program toward its intended results. The EMC should also be familiar with critical indicators in the ITT to determine whether adjustments to the evaluation plan are necessary.

For transparency purposes, MCC generally considers all monitoring data as approved for public reporting. Additionally, a variety of standard reports, which typically focus on a subset of monitoring data such as common indicators25 or Key Performance Indicators (KPIs), are published quarterly. Finally, MCC regularly contributes to inter-agency reporting on United States government initiatives and makes complete ITT data available through ITT data exports.

Should a concern about data quality or other risks arise that cannot be resolved during the QDRP formal review, the Accountable Entity and MCC M&E Lead must closely consider appropriate mitigation measures. Requests not to publish data should be rare and will be considered on a case-by-case basis by MCC’s Managing Director for M&E.

M&E Budget

MCC dedicates funding to implement each country’s M&E Plan. This includes the M&E activities budget in the compact or threshold program agreement and other MCC funding for independent evaluations. The M&E budget section of the M&E Plan must include the full budget for M&E activities, including post-program M&E activities (some of which the partner country may be required to fund), and must identify which source of funding will be used. M&E-related expenses usually account for approximately 3% of the program value.

Within the program, M&E funds may not be reallocated to other projects without prior agreement from the program’s MCC M&E Lead and appropriate MCC approval through the QDRP approval process.

Independent Evaluation

While good program monitoring is essential for program management, it is not sufficient for assessing the achievement of expected project results. Therefore, MCC uses evaluations as a tool to better understand the effectiveness of its projects. Evaluation is the systematic collection and analysis of information about the characteristics and outcomes of a project.26 Detailed guidelines and standards for the preparation, review, and dissemination of evaluations are set forth in MCC’s Evaluation Management Guidance.

Evaluation Requirements

Every project in a compact or threshold program must undergo an independent evaluation to assess whether it achieved its stated objective, unless MCC determines, at its discretion, that the cost of evaluating the project outweighs the accountability and learning benefits. An independent evaluation is conducted by parties independent of project design and implementation and uses rigorous and ethical methods to produce unbiased analysis that is meaningful for decision-makers.27

MCC evaluations are summative. A summative evaluation is a data-driven means of assessing a project’s effectiveness at achieving targeted results after the project has concluded. The questions that guide the focus of MCC evaluations are referred to as “summative evaluation questions.” Each MCC evaluation must answer two summative evaluation questions regarding project effectiveness28:

1. To what extent was the project implemented according to plan (in terms of quantity and quality of outputs)?

This question acknowledges there are often changes during implementation. It ensures the evaluation understands what was actually implemented, as compared to what was planned.

2. Did the project achieve its stated objective in the timeframe and magnitude expected, as documented in the current M&E Plan? Why or why not?