Overview

The Millennium Challenge Corporation (MCC) invests in independent evaluations[[Hereafter, the term “evaluation” can be assumed to mean “independent evaluation”, i.e. an evaluation conducted by a 3rd party that is independent of MCC.]] to measure results of MCC-financed and Millennium Challenge Account (MCA)-executed programs. The independent evaluations are conducted by third-party experts (Independent Evaluators[[Hereafter, the term “evaluator” can be assumed to mean “independent evaluator”, i.e. the 3rd party that MCC has commissioned to conduct an independent evaluation.]]) to produce high quality, credible analysis. Independent evaluations hold MCC and country partners accountable for whether or not intended results of the program occurred and contribute directly to learning the reasons such results were or were not achieved for future decision-making. The aim is for independent evaluations to be summative in nature – meaning to measure results and identify learning that can inform future project decisions and expected results. Therefore, at a minimum, each evaluation is guided by the following questions:

- Was the program implemented according to plan (in terms of quantity and quality of outputs)?

- Did the program achieve its targeted outcomes, particularly its stated objective, in the timeframe and magnitude expected? Why or why not?

- Do the results of the program justify the allocation of resources towards it?

Defining Evaluation Nomenclature

MCC’s Monitoring and Evaluation (M&E) Policy defines evaluation as the systematic and objective assessment of the design, implementation, and results of an Activity, Project or Program. MCC contracts Independent Evaluators to assess the validity and/or achievement of a program’s logic or hypothesized theory of change. The M&E Policy defines a project as a group of activities implemented together to achieve an objective. Given this, there should generally be one evaluation per MCC project to assess whether the objective was achieved and the reasons for success or failure. When projects include activities that are not credibly linked to one another or the project objective, it may be necessary to conduct multiple evaluations of the differing program logics underlying a project (e.g. multiple Activity level evaluations). When deciding how many different evaluations are warranted, consider the following:

- If multiple Projects have a common program logic, they should be considered for ONE comprehensive Evaluation.

- If multiple Activities within a Project have a common program logic, they should be considered for ONE comprehensive evaluation.

- If there are Projects/Activities that are targeting the same high-level outcomes (like income) but are targeting different beneficiaries, in different geographic areas, at different timeframes, MCC and the evaluator should carefully consider if this calls for ONE comprehensive evaluation or separate, individual evaluations.

- Within ONE comprehensive evaluation, the evaluator may utilize MULTIPLE evaluation methodologies (impact and/or performance) depending on the Project/Activities rules of implementation.

As per current practice and discussed throughout this document, one Evaluation requires one Evaluation Management Committee (EMC), one Evaluation Pipeline entry, and one Evaluation Catalog entry.

Evaluation methodology refers to the analytical method by which impacts, results, or findings are derived. The methodology used informs the evaluation type: impact, performance, and multiple. For impact evaluations, the methodology (or methodologies) defines the identification strategy by which a counterfactual is estimated to measure the causal impact(s) of the program. For performance evaluations, the methodologies are somewhat less standardized and the clearest one is pre-post, where results are estimated as a change over time, before and after the intervention. The methodology is a critical piece of information to consider when interpreting results. Evaluation methodology is distinct from, but supported by, the data collection methods. In other words, qualitative methods such as focus group discussions and key informant interviews are not an evaluation methodology, they are data collection methods in support of the evaluation methodology. One Evaluation may employ multiple evaluation methodologies, as well as data collection methods, across evaluation questions. The Evaluation Design Report should document in detail the full set of methodologies and data collection methods to be used, as requested in its template. When many different approaches are employed, evaluators should indicate the “primary” methodology, i.e. the one used to measure the program objective for the purpose of evaluation summary materials like the Evaluation Brief.

One Evaluation may require multiple methodologies

Impact

Impact evaluations (IE) are designed to measure statistically significant outcome changes that can be attributed to the MCC investment. This approach requires distinguishing changes in outcomes that resulted from MCC’s investment and not from external factors, such as increased market prices for agricultural goods, national policy changes, or favorable weather conditions. Changes resulting from external factors would have occurred without MCC’s investment and should not be attributed to MCC’s impact. Impact evaluations compare what happened with the MCC investment to what would have happened without it through the explicit definition and measurement of a counterfactual.

The following methodologies comprise the category of impact evaluations: (i) Random Assignment; (ii) Continuous Treatment; (iii) Difference in Differences only; (iv) Matching only; (v) Difference in Differences with Matching; (vi) Interrupted Time Series; (vii) Regression Discontinuity; and (viii) Other Impact (i.e. other quasi-experimental methods).
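To make the counterfactual idea concrete, the following minimal Python sketch contrasts a simple before-and-after change with a difference-in-differences estimate. All figures are hypothetical and purely illustrative; they are not drawn from any MCC evaluation, and the function names are assumptions introduced for this example. A real impact evaluation would also need to assess statistical significance and the validity of the comparison group.

```python
def before_after_change(before: float, after: float) -> float:
    """Change in an outcome over time, with no counterfactual."""
    return after - before


def difference_in_differences(
    treated_before: float,
    treated_after: float,
    comparison_before: float,
    comparison_after: float,
) -> float:
    """Change in the treated group minus change in the comparison group.

    The comparison group's change stands in for what would have happened
    to the treated group without the investment (the counterfactual).
    """
    treated_change = before_after_change(treated_before, treated_after)
    comparison_change = before_after_change(comparison_before, comparison_after)
    return treated_change - comparison_change


# Hypothetical mean farm incomes (arbitrary currency units).
naive = before_after_change(before=100.0, after=130.0)
did = difference_in_differences(100.0, 130.0, 100.0, 120.0)

print(f"Before-after change: {naive}")      # still includes external factors
print(f"Difference in differences: {did}")  # comparison-group trend netted out
```

In this made-up case the comparison group's income also rose, for example because of higher market prices, so only part of the treated group's gain would be attributed to the investment.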

Performance

Performance evaluations (PE) estimate the contribution of MCC investments to changes in outcome trends, including farm and household income, when formal measurement of a statistically valid counterfactual is not feasible. Performance evaluations cannot attribute outcome changes to specific causes, including MCC’s investments. However, they often provide crucial insights into strengths or weaknesses in program implementation through critical empirical and analytic assessment of the measurable components of the program’s intermediate and ultimate outcomes. They are also useful to compare changes in the situation before and after MCC’s investment and provide details on how and why (or why not) an investment might have contributed to changes in outcomes. Furthermore, they can often identify clear opportunities to improve program implementation and investment decisions, even when they cannot explicitly estimate how an investment might have contributed to changes in beneficiary incomes.

The following methodologies comprise the category of performance evaluations: (i) Pre-Post;[[MCC considers all approaches that rely on a comparison between conditions existing before and after program implementation to fall under this category; recognizing that there are many variations of Pre-Post methods, ranging from comparing two rounds of data to conducting time series analysis.]] (ii) Ex-Post thematic analysis;[[MCC considers this category to include retrospective evaluations that draw conclusions about results solely on post-program data. It generally includes qualitative assessments, such as case studies, but may incorporate quantitative data.]] (iii) Pre-Post/with Comparison Group;[[This methodology has been applied in past MCC evaluations but is expected to be used sparingly going forward. The value of collecting data on a comparison group that cannot be considered a valid counterfactual must be justified.]] (iv) Modeling;[[MCC considers this category to reflect results that are modeled, based on existing literature or sector-specific models, rather than directly measured. The Highway Development Model widely used in roads projects is an example of this.]] and (v) Other Performance.
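The relationship between methodologies and evaluation type can be sketched as a simple lookup. The Python below is only an illustration: the two sets mirror the methodology lists in this section, while the function name `evaluation_type`, its return labels, and the choice to return "To Be Determined" for an empty list are assumptions made for this example, not an MCC tool. It should be given only the methodologies used to measure program results; deciding which methods are merely complementary requires judgment that the sketch does not capture.

```python
from __future__ import annotations

IMPACT_METHODOLOGIES = {
    "Random Assignment",
    "Continuous Treatment",
    "Difference in Differences only",
    "Matching only",
    "Difference in Differences with Matching",
    "Interrupted Time Series",
    "Regression Discontinuity",
    "Other Impact",
}

PERFORMANCE_METHODOLOGIES = {
    "Pre-Post",
    "Ex-Post thematic analysis",
    "Pre-Post/with Comparison Group",
    "Modeling",
    "Other Performance",
}


def evaluation_type(methodologies: list[str]) -> str:
    """Derive an evaluation type label from the methodologies it uses."""
    unknown = set(methodologies) - IMPACT_METHODOLOGIES - PERFORMANCE_METHODOLOGIES
    if unknown:
        raise ValueError(f"Unrecognized methodologies: {sorted(unknown)}")

    has_impact = any(m in IMPACT_METHODOLOGIES for m in methodologies)
    has_performance = any(m in PERFORMANCE_METHODOLOGIES for m in methodologies)

    if has_impact and has_performance:
        return "Multiple"
    if has_impact:
        return "Impact"
    if has_performance:
        return "Performance"
    # Assumption: no methodology chosen yet.
    return "To Be Determined"


print(evaluation_type(["Random Assignment", "Pre-Post"]))
```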

Multiple – Impact and Performance

This evaluation type applies only at the evaluation level and is used when an evaluation includes both impact and performance evaluation methodologies to measure distinct components or outcomes of the program. This situation may arise when part of the logic is being evaluated with an IE while the rest is evaluated with a PE, or when a program implements slightly (or significantly) different interventions in different places, under the banner of a single project (e.g. in a grant facility), and different evaluation approaches are used for different project sites. In these cases, the Evaluation Design Report should present how results will be estimated using varying methodologies to make an overall evaluative determination. If the results drawn from the multiple methodologies cannot be combined to produce a program-level conclusion, then perhaps the two (or more) methodologies should actually be considered different evaluations. Note that this classification should not be used when an IE incorporates PE methods to complement or enhance the IE. For example, if an IE incorporates a process evaluation of implementation (as it should), it would still be classified as an IE. Similarly, if an IE incorporates a qualitative interim study to better understand dynamics influencing results, it would still be classified as an IE.

To Be Determined

This is the classification used when it is known that an evaluation will be commissioned, but not whether or not an impact and/or performance evaluation is the most appropriate and feasible type of evaluation.

No Independent Evaluation

This is the classification used when MCC determines it is not feasible or cost-effective to conduct an evaluation. For an evaluation to receive this classification, there must be an EMC Memo, cleared by MCC M&E Management, summarizing MCC’s decision about evaluation and the rationale[[This practice has been in place since January 2020.]].

Evaluation Life Cycle

Every evaluation is expected to follow a similar lifecycle:

- Evaluation Planning[[Evaluation plans are documented in the Investment Memo, Compact or Threshold Agreement, and M&E Plan.]]

- Development of SOW

- Contracting

- Design

- Baseline Data Collection (optional) – This does not occur for ex-post evaluations and even prospectively designed evaluations may not require primary baseline data collection by the evaluators.

- Baseline Report (optional) – see above

- Interim Data Collection (optional) – Not all evaluations require interim data collection and reporting.

- Interim Report (optional) – see above.

- Final[[The terms “final” and “endline” evaluation are synonymous.]] Data Collection

- Final Report

- Contract Closeout[[Contract closeout requires confirming the receipt of all evaluation deliverables and then appropriately storing/documenting them in M&E filing systems, the pipeline, and the Evaluation Catalog; paying the final invoice, drafting the final CPARS form, de-obligating any remaining funds; and preparing a final contract modification.]]

- Completed

Note on Completed Evaluations

To be considered Complete, an Evaluation must have an Evaluation Design Report (EDR) and at least one analysis report (Interim and/or Final), at least one Evaluation Brief and MCC Learning covering the report, data documentation that has been approved by MCC’s Disclosure Review Board (DRB) (when feasible), and a closed out evaluation contract. The contract closeout stage will continue beyond the delivery of the final report package and contract end date due to delays in invoicing and MCC Administration & Finance’s annual de-obligation and closeout procedures. There are a few cases in which MCC ends an evaluation before it completes the planned lifecycle – i.e. before the Final Data Collection/Report milestones. In these cases, the interim data and/or report provide sufficiently comprehensive findings about whether or not the project achieved its expected results and additional investment in Independent Evaluator data collection and analysis on the same or longer-term outcomes is no longer considered cost-effective. The evaluation is still considered complete because it has achieved its objective of measuring the results of the project for learning and accountability (See Namibia Communal Land).
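The completion criteria above can be read as a checklist. The Python sketch below is one hypothetical way to express it; the `EvaluationRecord` class and its field names are assumptions introduced for illustration and do not correspond to any MCC system. It also interprets "when feasible" as waiving the data documentation criterion when such documentation is not feasible, which is an assumption rather than stated policy.

```python
from dataclasses import dataclass


@dataclass
class EvaluationRecord:
    """Hypothetical record of what an evaluation has produced so far."""

    has_design_report: bool
    analysis_report_count: int  # Interim and/or Final reports delivered
    has_brief_and_mcc_learning: bool
    data_documentation_feasible: bool
    data_documentation_drb_approved: bool
    contract_closed_out: bool


def missing_for_completion(record: EvaluationRecord) -> list:
    """Return the completion criteria that the record does not yet meet."""
    missing = []
    if not record.has_design_report:
        missing.append("Evaluation Design Report")
    if record.analysis_report_count < 1:
        missing.append("analysis report (Interim and/or Final)")
    if not record.has_brief_and_mcc_learning:
        missing.append("Evaluation Brief and MCC Learning")
    # Assumption: "when feasible" waives this criterion if not feasible.
    if record.data_documentation_feasible and not record.data_documentation_drb_approved:
        missing.append("DRB-approved data documentation")
    if not record.contract_closed_out:
        missing.append("closed-out evaluation contract")
    return missing


def is_complete(record: EvaluationRecord) -> bool:
    """An evaluation is Complete when no criterion is missing."""
    return not missing_for_completion(record)


example = EvaluationRecord(
    has_design_report=True,
    analysis_report_count=1,  # e.g. an interim report only
    has_brief_and_mcc_learning=True,
    data_documentation_feasible=True,
    data_documentation_drb_approved=True,
    contract_closed_out=False,
)
print(is_complete(example), missing_for_completion(example))
```

Because a single analysis report satisfies the second criterion, an evaluation that ends after its interim report can still pass this check once its contract is closed out.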

Note on Cancelled Evaluations

This is the classification used when an evaluation was contracted but due to one or a variety of project and/or evaluation-related reasons, MCC canceled the evaluation and it is considered incomplete (i.e. does not meet the criteria described in the previous subsection). At this point, the evaluation can be transferred to a new evaluator, relaunched/rebid with a new design, or in rare cases remain incomplete. For an evaluation to receive this classification, there must be a Cancellation Memo, cleared by MCC M&E Management, summarizing MCC’s decision about the evaluation and the rationale[[This practice has been in place since January 2020.]].

MCC, MCA, Evaluator Roles and Responsibilities

MCC, MCAs, and the Independent Evaluator - as well as other key stakeholders - play critical roles in designing, implementing, and disseminating the independent evaluations. Their roles and responsibilities are defined in the Evaluator Scope of Work (SOW) for each evaluation contract. Generally, they are:

Millennium Challenge Corporation (MCC): MCC is responsible for oversight of the Independent Evaluator and quality control of evaluation activities, including the following specific responsibilities:

- Assess when a program is ready for evaluation planning through evaluability assessment;

- Determine what program components (Project(s), Activities, Sub-Activities) will be covered by the evaluation;

- Set the evaluation questions to achieve accountability and learning objectives;

- Document the planned scope and timing of the evaluation in the M&E Annex of the Compact or Threshold agreement and update it in the program M&E Plan;

- Build buy-in and ownership of the evaluation;

- Contract and supervise the Independent Evaluator;

- Conduct quality reviews of all evaluation products (reports, questionnaires, etc.);

- Facilitate public dissemination efforts to inform decision-makers on results and learning generated by the evaluation;

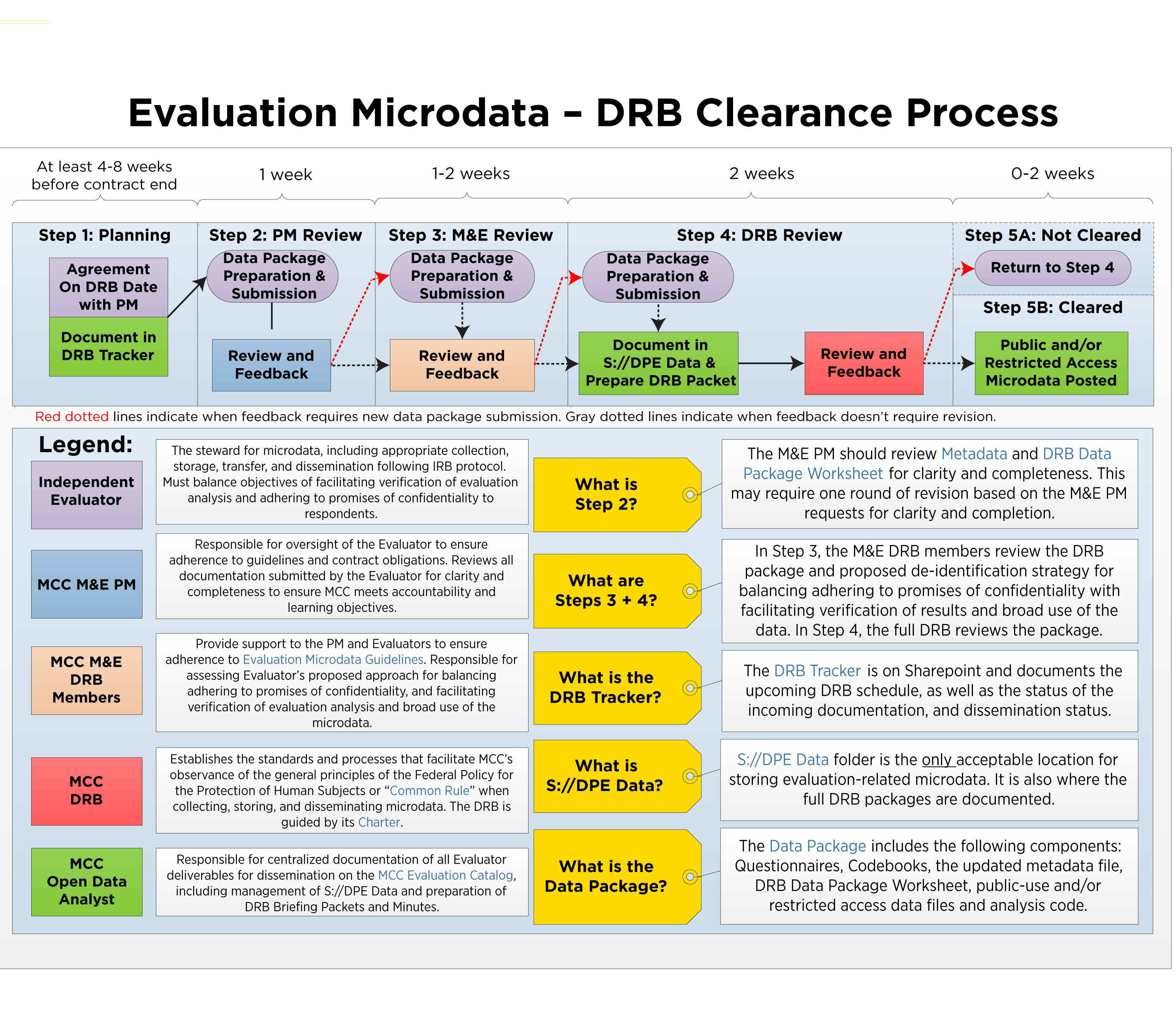

- Oversee preparation of public-use data that ensures appropriate balance of enabling verification of analysis and broad use of the data with adherence to promises of confidentiality to survey respondents; and

- Determine and manage the evaluation budgets.

Millennium Challenge Account (MCA):

- Assess when a program is ready for evaluation planning through evaluability assessment;

- Set the evaluation questions to achieve accountability and learning objectives;

- Document the current evaluation plan in the M&E Plan;

- Build buy-in and ownership of the evaluation;

- Manage the data collection firms (as applicable, during the program implementation period);

- Conduct quality reviews of all evaluation products (reports, questionnaires, etc.); and

- Facilitate and lead public dissemination efforts.

Independent Evaluator:

- Assess the completeness and credibility of the program logic and the appropriateness of proposed evaluation questions through evaluability assessment;

- Finalize evaluation questions to meet needs and demand from MCC and country partners;

- Develop the most rigorous evaluation design feasible given rules of program implementation;

- Support MCC and MCA to build buy-in and ownership of evaluation;

- Develop evaluation materials that are held to international standards;

- Ensure Institutional Review Board clearance of survey protocols;

- Ensure execution of the evaluation and data collection comply with all applicable laws and regulations;

- Manage the data collection firms (as applicable);

- Ensure data quality during collection and entry through supervisions and management;

- Lead data cleaning, analysis, and interpretation of results;

- Produce high quality, credible, transparent evaluation reports;

- Lead preparation of public-use data that ensures appropriate balance of enabling verification of analysis and broad use of the data with adherence to promises of confidentiality to survey respondents; and

- Lead public dissemination efforts.

Managing Evaluations

Objectives

The objectives of the EMG, with respect to managing evaluations, stem from the core lessons learned from the “First Five Ag” impact evaluations (see also “Learning from Evaluation at the MCC,” Sturdy, Aquino, and Molyneaux 2014):

- Ensure clearly defined program logic. This requires clearly defining the inputs, outputs, outcomes, and goals of each intervention in a way that demonstrates how the intervention will lead to expected results. For this, there must be common understanding across stakeholders on the problem diagnostic, program logic, targeted populations and their geographic locations, metrics for measuring results, and risks and assumptions. This should also facilitate early determination of how many evaluations will be required to evaluate any Program’s program logic(s).

- Ensure early integration of evaluation with program design and implementation. The evaluation methodology and timeline are often closely linked to program design and implementation. This requires close collaboration between M&E and Operations (both in MCC and MCA) as early as possible in the process once the essential elements of the project (e.g. who will be targeted, where, how, and what are the essential project design elements) are defined, and then continuously throughout Compact/Threshold development, implementation, and Post-program.

- Ensure evaluations are structured for learning and work toward establishing a feedback loop. This requires identifying the consumers of evaluation results and ensuring a feedback loop is established so that evaluation results can inform future decision-making.

- Ensure appropriate quality control mechanisms are in place. This requires making sure that the appropriate stakeholders are reviewing key evaluation deliverables, such as evaluation design reports, and assessing risks to the interventions and their evaluations, in a timely manner.

- Who is involved? This guidance identifies the appropriate staff from Management, Operations, M&E, and Economic Analysis to review critical evaluation products/deliverables to ensure accountability and learning objectives are met and weighed against the expected costs.

- When and how do they need to be involved? This guidance defines the critical milestones in every evaluation life cycle when the EMC reviews and provides feedback on evaluation products/deliverables.

- What are the risk factors? This guidance defines the risk factors facing each evaluation at each milestone to facilitate MCC’s assessment as to whether the expected benefits of the evaluation are worth the costs. These risk factors often accumulate over time.

Evaluation Management Committee (EMC)

The EMC is established to ensure that the design, implementation, and learning from evaluations involve input from key stakeholders across MCC. Engagement with these stakeholders is critical to ensuring evaluation findings are relevant and actionable for future decision-making. An EMC composed of one Chair and five or more Committee members is established for each Evaluation. It should be established as early in compact/threshold development as necessary for each Evaluation, and members should expect to participate in the EMC for the duration of the Evaluation life cycle (6+ years). If an EMC member must leave the EMC, the Chair, in collaboration with the Department of Policy and Evaluation (DPE) and Department of Compact Operations (DCO) Management, must identify the appropriate replacement.

Chair. The MCC M&E Managing Director (or designated representative) is responsible for decisions related to investments in the Evaluations. Using input from EMC members, the Chair decides if the expected benefits of the evaluation outweigh the costs.

Members[[Given that participation on the EMC will require staff time and is crucial to the evaluation quality assurance process, MCC should include participation on the EMC as part of Committee members’ performance plans.]]

Monitoring and Evaluation (M&E) Lead. The M&E Lead’s aim is to produce high-quality, relevant, and timely evaluations that meet MCC and partner country stakeholders’ needs related to accountability and learning. The M&E Lead also aims to keep the evaluation contract on schedule, within scope, and within budget. The M&E Lead is responsible for overall management of assigned independent evaluation activities and ensuring common understanding of the program logic(s) and evaluation questions, contracting and managing evaluator(s), and ensuring quality control for evaluation materials and reports.

Evaluation Lead. The Evaluation Leads are responsible for: (i) technical quality assurance in EMC reviews, (ii) contract management oversight and backstopping, (iii) serving as a knowledge management resource during program and evaluation development, and (iv) serving as a sector liaison with internal and external stakeholders. More detail on the role of Evaluation Leads is included in Annex 1 Evaluation Leads Roles and Responsibilities. Given the size and scope of some sectors, MCC M&E Management has assigned multiple Evaluation Leads to cover certain sectors. It should be noted that some evaluations may be multi-sectoral, in which case MCC M&E Management designates the primary Evaluation Lead to cover the evaluation, and any other sectoral evaluation expert will be included in EMC reviews as an EMC member[[Examples of this include Agriculture and Irrigation Activities that include a Land component; and FIT-led Activities that focus on a specific sector (such as Energy, WASH, and Transportation).]]. The following summarizes Evaluation Leads by sector:

| MCC Evaluation Sectors | Evaluation Lead(s)[[As of November 2023.]] |
|---|---|
| Agriculture & Irrigation | Sarah Lane |
| Capacity Building and Institutional Development | Cindy Sobieski |
| Education | Jenny Heintz |
| Energy | Cindy Sobieski |
| Finance, Investment, and Trade (FIT)[[Note that this sectoral grouping is intended to capture programs that target FIT outcomes. In many cases, MCC programs have a FIT component or are led by FIT staff, but are focused on a broader sector, such as Energy or Education. In these cases, the program evaluations fall under the broader sector and not FIT in terms of Evaluation Lead oversight.]] | Jenny Heintz |
| Health | Jenny Heintz |
| Land | Sarah Lane |
| Transportation | Cindy Sobieski |
| Water, Hygiene, and Sanitation (WASH)[[Note that these sectoral groupings differ from the sectors noted on MCC’s website in some cases, e.g. Water includes both WASH and irrigation. M&E’s groupings aim to more closely map to similar theories of change.]] | Jenny Heintz |

Sector/Technical Operations Lead(s). The Sector/Technical Operations Lead(s) is responsible for defining program logic, assumptions, evaluation questions, and identifying users of evaluation results. They should also pay close attention to survey questions to ensure that key terms and concepts are defined appropriately and that the right questions are asked. Please note, in the post-program period, the DCO Sector Practice Lead will be requested to confirm/identify appropriate Sector Lead(s) for reviewing materials.

Cross-cutting Lead(s). Depending on the scope of the program being evaluated, DCO cross-cutting staff should be included in the EMC. The most common practice groups in this category are Gender and Social Inclusion (GSI) and Environmental and Social Performance (ESP); however, others may also have a stake in the evaluation. Err on the side of being more inclusive in the EMC.

Country Relations. One representative from the Country Team Lead (CTL)/Resident Country Mission (RCM) is responsible for assessing policy relevance, understanding country context, and contributing to risk assessments. In the post-program period, the DCO Regional Managing Director will be asked to identify a designated representative.

In addition to the above, MCC M&E Leads must always reference the Evaluation Review Matrix[[Evaluation Matrix updated periodically with staffing changes.]] to confirm who should see what at each milestone. M&E and DCO agreed to the practice of copying members of Management on certain EMC reviews when the EMG was first developed and launched. M&E copies Management to keep them aware of ongoing evaluation work and give them an opportunity to provide feedback at critical points in the evaluation, particularly ahead of the VP review of interim/final reports. This measure is also intended to prevent requests for revisions or other delays during VP review. The MCC M&E Lead is responsible for documenting review dates, decisions, and feedback using tools like the Evaluation Master Tracker.

Evaluation Management Committee (EMC) Documentation

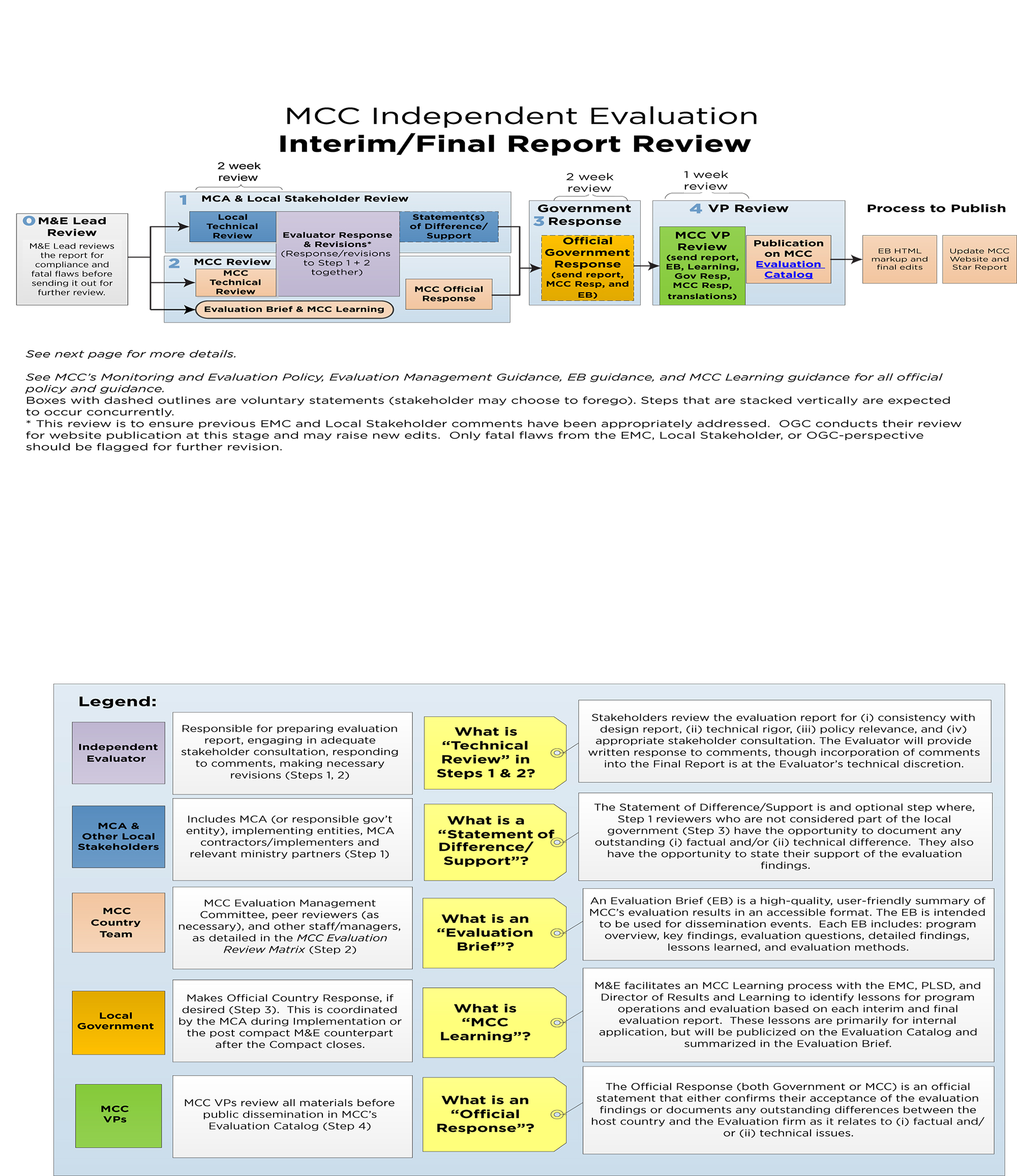

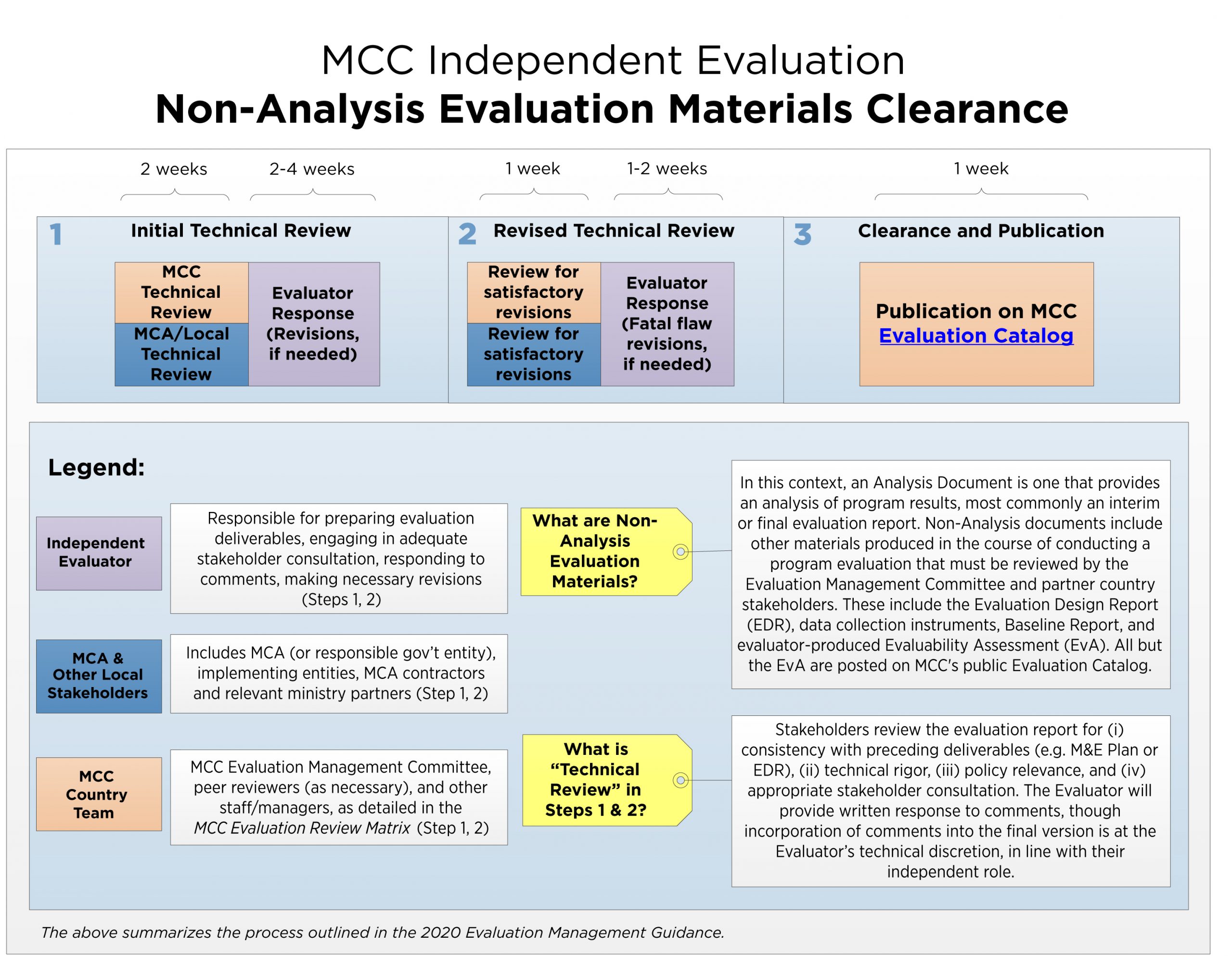

When an evaluation product/deliverable is ready for review, the MCC M&E Lead will distribute or present it to the MCC EMC for review and comment. The MCC M&E Lead is responsible for compiling all EMC members’ comments into one comprehensive summary table. For the Evaluator SOW, Evaluability Assessment, and Data Collection Instruments, M&E Leads should document EMC feedback internally only (feedback on the latter two is shared with the evaluator). For the Evaluation Design Report, Baseline Report, and Interim/Final Evaluation Report, EMC feedback will be made public with the final version of the report.

M&E Leads should compile and review all comments before sending them to the evaluator to eliminate repetition, assess whether comments are within the evaluation’s scope, and provide guidance on how the evaluator might address particular comments, given time or other resource constraints. M&E Leads must balance the need to be responsive to EMC input with the need to manage the evaluation’s quality, timeline, and budget. As per the Evaluator SOW, the Independent Evaluator is then responsible for documenting all local stakeholder and EMC feedback and their response to that feedback in an Annex of the Report, which will be publicly posted on the Evaluation Catalog.

When circulating reports for review, it is important to remind all reviewers that their comments will be made public. The comments annex should state the reviewer’s organization and role and exclude their name. Once submitted by the evaluator, the final product/deliverable with comments and responses will be sent back to the MCC EMC. The MCC EMC will provide either Clearance or No Clearance on non-analysis evaluation products/deliverables: Evaluability Assessment, Design Report, Questionnaires, and Baseline Report (see Annex 2 Clearance Process).

The process is as follows:

- Initial Technical Review – Country stakeholders and the EMC review the materials and provide written comments (materials can be circulated for review to all stakeholders/EMC members at the same time).

- Evaluator Revision – Evaluators should review feedback, provide a written response to all comments, and revise the report accordingly.

- Revised Technical Review – Country stakeholders and the EMC review the evaluator’s response to their comments and the report revisions to ensure their feedback has been adequately addressed. At this stage, only fatal flaws should be considered for further revision by the evaluator.

- Finalization and/or Publication on Evaluation Catalog

For Interim/Final Evaluation Reports, the process is as follows:

- Initial Technical Review – Country stakeholders and the EMC review the materials and provide written comments (materials can be circulated for review to all stakeholders/EMC members at the same time).

- Evaluator Revision – Evaluators should review feedback, provide a written response to all comments, and revise the report accordingly.

- Revised Technical Review – Country stakeholders and the EMC review the evaluator’s response to their comments and the report revisions to ensure their feedback has been adequately addressed. At this stage, only fatal flaws should be considered for further revision by the evaluator.

- Partner Government Response – Representatives of the partner government (can be MCA CEO during implementation or the post-program M&E Point of Contact after the program has closed) review the finalized report package consisting of the report (with required annexes), Evaluation Brief, and draft MCC Response and provide an optional response.

- MCC VP Review – MCC VPs review the finalized report package, including the partner government response, and accept the draft MCC response.

- Publication on Evaluation Catalog
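The two review sequences listed above share their first three steps and differ only in how they conclude. The Python sketch below captures that structure. It assumes that the shorter sequence applies to the non-analysis deliverables named earlier and the longer one to Interim and Final reports, which is an inference from the text rather than an explicit statement; the function name `review_steps` and the deliverable labels are likewise illustrative.

```python
from __future__ import annotations

# Steps common to both review sequences.
COMMON_STEPS = [
    "Initial Technical Review",
    "Evaluator Revision",
    "Revised Technical Review",
]

# Assumption: deliverables that receive Clearance/No Clearance from the EMC.
NON_ANALYSIS_DELIVERABLES = {
    "Evaluability Assessment",
    "Design Report",
    "Questionnaires",
    "Baseline Report",
}

# Assumption: analysis reports that go on to partner government and VP review.
ANALYSIS_DELIVERABLES = {"Interim Report", "Final Report"}


def review_steps(deliverable: str) -> list[str]:
    """Return the ordered review steps for a given deliverable."""
    if deliverable in NON_ANALYSIS_DELIVERABLES:
        return COMMON_STEPS + [
            "Finalization and/or Publication on Evaluation Catalog",
        ]
    if deliverable in ANALYSIS_DELIVERABLES:
        return COMMON_STEPS + [
            "Partner Government Response",
            "MCC VP Review",
            "Publication on Evaluation Catalog",
        ]
    raise ValueError(f"Unknown deliverable: {deliverable}")


for step in review_steps("Final Report"):
    print(step)
```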

In the event of disagreement on how to proceed - as documented in the comments by MCC EMC Members and/or any remaining disagreement with local stakeholders - the Chair is the final decision maker and will confer with any relevant Management/staff to reach agreement on next steps.

Evaluation Milestones

There are up to seven key milestones in the evaluation life cycle when the EMC convenes to review and assess the evaluation products/deliverables. Recall that country counterparts (MCA staff, implementing entities in government, implementers, and/or post-program government representatives) should also be given the opportunity to review these same products/deliverables. The milestones[[Some evaluations may not have baselines. Some evaluations may include one or more midline/interim studies as well. Any midline/interim reports should follow the same review and clearance procedures as endline/final reports.]] are:

| Milestone | Objective |
|---|---|
| MCC-led Evaluation SOW | The EMC must agree on the questions that will serve as the foundation for the evaluation, the key staff that will comprise the evaluation team, and the proposed budget. |
| Evaluability Assessment | The EMC should reflect on the evaluator’s assessment of the program logic, including key risks and assumptions as well as the underlying literature and evidence, and respond to suggestions for logic revisions or changes to the evaluation questions. |
| Evaluation Design Report | The EMC must agree on the final set of evaluation questions, key measures, scope and timing of data collection, alignment with the CBA, and evaluation budget. |
| Baseline Materials and Data Collection | This is the EMC’s opportunity to understand the data that will be analyzed and reported to ensure that it is being collected in the right way (e.g. using appropriate terminology, reflects correct understanding of program interventions and aims, etc.). Sector input is particularly important here. |
| Baseline Report + Data | The EMC must review for accuracy and to ensure that learning is understood and shared across the agency. |
| Interim/Final Materials and Data Collection | This is the EMC’s opportunity to ensure that any concerns they had about missing data or the way data was collected at baseline are addressed. Sector input is particularly important here. |
| Interim/Final Report + Data | The EMC must review for accuracy and to ensure that learning is understood and shared across the agency. |

Evaluation SOW + IGCE

As per MCC’s M&E Policy, MCC uses an Evaluability Assessment tool to determine whether or not the Project is ready for evaluation planning based on available documentation on the five core areas of any project design: (i) problem diagnostic with sufficient supporting data/evidence; (ii) project objectives and theory of change/logic with sufficient supporting data/evidence; (iii) project participants clearly defined and justified in terms of geographic scope and eligibility criteria; (iv) metrics for measuring results for both accountability and learning clearly defined; and (v) risks and assumptions clearly defined with potential risk mitigation strategies. In addition to the evaluability assessment, the following should be considered when deciding when to procure an evaluator:

- Clarity and firmness of program design – the evaluator should only be procured once there is sufficient detail around which to design an evaluation (e.g. evaluation questions, power calculations, survey questions). The evaluability assessment focuses on this dimension. Another related consideration is whether the design is sufficiently finalized. If design decisions are still being discussed, implementation feasibility and costs are still being explored, and/or a compact reallocation is likely, it may be too early to hire an evaluator.

- Timing of program implementation and the need for baseline data – if it is clear that primary baseline data collection will be necessary to effectively evaluate the program, then the evaluator must be hired with sufficient lead time to be able to design the evaluation and implement the baseline study prior to start of program exposure (see below). On the other hand, if exposure will not begin until completion of program implementation, it may not be necessary to hire an evaluator until late into the implementation period.

- Facilitating an impact evaluation – certain impact evaluation designs require adherence to rules of implementation, e.g. selection of program areas or participants, and these rules must be agreed to by MCC, MCA, implementers, and evaluators prior to the start of program implementation. It may be necessary to hire an evaluator early enough to participate in design discussions such that the needs of the evaluation are duly incorporated.

| Program treatment and the exposure period should be defined in program design documents. The cost-benefit analysis also defines the exposure period by establishing the year when targeted benefits will start accruing and should align with program design documents. So, what is treatment and what is an exposure period?

Program Treatment refers to the interventions that program participants and/or beneficiaries are exposed to and can be defined differently depending on the program. In a teacher training program, for example, treatment may be defined as training and so exposure to treatment begins the first day of training. In an electricity infrastructure project, treatment may be defined as community access to the grid, so exposure to treatment would not begin until the grid infrastructure is built and energized. It is important for evaluations to understand and document the program treatment that is being evaluated to define this treatment start date. The required exposure period is the period of time a participant needs to be exposed to a program (i.e. “treatment”) to reasonably expect to observe the intended impact on outcome(s). The required exposure period is an important piece of information to consider when reviewing evaluation design and results as it directly informs when data is collected post-treatment to estimate impacts on outcome(s). For example, depending on the treatment, context, and the outcome being measured, a one-year exposure period may be too short or too long. In many evaluations, there may be a range of actual exposure periods because program implementation does not occur at the same time in all places, and sometimes spans years. Additionally, different outcomes may be measured following different required exposure periods, informing the timeline for interim and final data collection and results. Exposure periods may need to be defined both for interim and final evaluation data collection. |
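The relationship between treatment start dates, the required exposure period, and data collection timing can be illustrated with a short calculation. The following is a minimal sketch only: the site names, start dates, and the 12-month required exposure period are hypothetical values chosen for illustration, and the function names are not part of any MCC tool.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    The day is clamped to the last day of the target month
    (e.g. 31 January plus one month gives the end of February).
    """
    total = start.month - 1 + months
    year = start.year + total // 12
    month = total % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def earliest_collection_date(treatment_starts: dict, required_exposure_months: int) -> date:
    """Earliest date by which every site has met the required exposure period."""
    latest_start = max(treatment_starts.values())
    return add_months(latest_start, required_exposure_months)


def exposure_in_months(treatment_starts: dict, collection_date: date) -> dict:
    """Actual exposure per site, in whole months, at a given collection date."""
    result = {}
    for site, start in treatment_starts.items():
        months = (collection_date.year - start.year) * 12 + (collection_date.month - start.month)
        if collection_date.day < start.day:
            months -= 1  # the current month is not yet complete
        result[site] = months
    return result


# Hypothetical example: treatment begins at different times in two sites.
starts = {"site_a": date(2021, 3, 15), "site_b": date(2021, 11, 1)}
collection = earliest_collection_date(starts, required_exposure_months=12)
print(collection)                              # 2022-11-01
print(exposure_in_months(starts, collection))  # {'site_a': 19, 'site_b': 12}
```

In this illustration, waiting until the last site reaches 12 months of exposure means the first site has been exposed for 19 months, which is the kind of range in actual exposure periods that an evaluation design should document.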

Examples of risks facing the independent evaluation at this stage include:

- Incorrect definition of the Project components that should be covered by the evaluation; incorrect identification of type of evaluation that should be used;

- Hiring the Independent Evaluator too early, resulting in unnecessary costs; or too late, which may affect quality of evaluation;

- Incomplete/inaccurate prioritization of evaluation questions and outcomes;

- Incomplete/inaccurate definition of evaluation team qualifications; and

- Underestimating the costs of the evaluation.

At this stage, MCC M&E will determine the usefulness of including an evaluator-led Evaluability Assessment into the Evaluation SOW as a separate deliverable, or as an annex to the Evaluation Design Report (discussed below). Some considerations:

- MCC has found evaluator-led Evaluability Assessments to be useful as an initial step for ex-post evaluations of closed projects. A strong example of this type is the Morocco I Small-Scale Fisheries by Mathematica Policy Research.

- MCC has found that the benefits of evaluator-led Evaluability Assessments for prospective evaluations vary relative to their costs. Some have been useful and complementary to the development of a Design Report (e.g. Georgia II – Improving General Education Quality), while others were less so and the evaluability dimensions may have been more appropriately considered within the Design Report rather than as a preceding deliverable.

Evaluator-led Evaluability Assessment

As discussed above, evaluators should conduct an Evaluability Assessment either as an initial contract deliverable or as part of the Evaluation Design Report. In either scenario, the aim of the Evaluability Assessment is to document an independent assessment of program design. This assessment will serve to inform decisions about the evaluation questions and design. For example, if the evaluator’s assessment is that the program logic is not founded in reliable evidence, then the EMC should consider whether the entire program logic can be evaluated cost-effectively. Further, a frank assessment of the evidence and justification contributes to the accountability and learning goals of independent evaluation.

Evaluators should use the Evaluability Assessment tool as a guide for the content of the assessment, but the tool should not dictate the format of this deliverable. Generally, requesting a report that speaks to the five dimensions of evaluability has produced better results than requesting evaluators to fill out the tool. Given that there is no template for the Evaluability Assessment report, M&E Leads should guide evaluators so that the report is fit-to-purpose for the particular project team/EMC/evaluation.

To date, MCC has not made Evaluability Assessments public on the Evaluation Catalog (except if they are part of the Evaluation Design Report or if they serve as the final evaluation report[[Generally, the latter situation occurs when the program is not considered to be evaluable and no further evaluation work is commissioned.]]). The assessment goes through the EMC clearance process for non-analysis documents.

Evaluation Design Report

At this milestone, MCC M&E transfers ownership and leadership of the independent evaluation to the Independent Evaluator. The role of MCC and MCA/country partners is to provide timely feedback and comments to the Independent Evaluator to design the most rigorous, high quality, credible evaluation given project design and implementation rules.

The MCC EMC will review the Evaluation Design Report (EDR) with the following objectives in mind: (i) prioritize evaluation questions and outcomes that meet demand from key decision-makers; (ii) ensure that the program Objective and all key accountability metrics modeled in the cost-benefit analysis are measured or justification is provided as to why not (note that the Objective noted in the Compact/Threshold should be the key outcome around which the evaluation is structured/powered); (iii) apply the most rigorous evaluation methodology feasible given project design and implementation rules; (iv) clearly define the analysis plan to ensure consensus on outcomes – their definitions and measurement; (v) clearly define sample population and sampling strategy that aligns with project target populations; (vi) clearly define exposure period that maps data collection timelines with project start date timelines; and (vii) ensure alignment between evaluation design and contract funding and initiate a budget modification, if necessary.

The risks facing the independent evaluation at this stage are a lack of understanding of the program logic and program implementation resulting in:

- Infeasible evaluation methodology;

- Lack of alignment between the target population and the evaluation sample population;

- Lack of agreement or understanding on required exposure period and necessary data collection dates; and

- Sample sizes that are not large enough to detect impact.
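The risk of an underpowered sample can be made concrete with a minimum detectable effect (MDE) calculation. The sketch below uses the standard textbook formula for a simple two-arm comparison of means, and it assumes individual-level assignment, equal variances, no clustering, and no covariate adjustment. These are simplifying assumptions for illustration; an evaluator's actual power calculations will reflect the specific design. The function name and example values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_effect(
    n_total: int,
    sd: float,
    share_treated: float = 0.5,
    alpha: float = 0.05,
    power: float = 0.80,
) -> float:
    """Smallest true effect detectable with the given sample and settings.

    MDE = (z_{1-alpha/2} + z_{power}) * sd * sqrt(1 / (P * (1 - P) * N)),
    where P is the share of the sample assigned to treatment and N is the
    total sample size. Assumes no clustering (illustrative simplification).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_power = NormalDist().inv_cdf(power)
    return (z_alpha + z_power) * sd * sqrt(1 / (share_treated * (1 - share_treated) * n_total))


# Hypothetical example: outcome standardized to sd = 1, 1,000 respondents.
mde_1000 = minimum_detectable_effect(n_total=1000, sd=1.0)
mde_4000 = minimum_detectable_effect(n_total=4000, sd=1.0)
print(round(mde_1000, 3))  # about 0.177 standard deviations
print(round(mde_4000, 3))  # about 0.089 standard deviations
```

Under these assumptions, quadrupling the sample halves the MDE. If the effect the program expects to produce is smaller than the MDE, the sample is not large enough to detect impact.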

Baseline Materials and Data Collection

The EMC will review and provide feedback on the Baseline Materials (questionnaires, informed consent, survey protocols) with the following objectives in mind: (i) in cases where the treatment does not begin until the end of program implementation (e.g. infrastructure interventions), monitor and assess if project is being implemented according to plan (quantity, quality, and timing of outputs) to ensure evaluation methodology still follows project design and implementation (this would not be possible for programs where the implementation is the same as treatment, e.g. training); (ii) ensure that baseline data collection begins and ends as close as possible prior to the start date of the program implementation or exposure; (iii) review questionnaires to ensure that the appropriate data is collected and there is agreement on how outcomes of interest will be measured and who will be surveyed/interviewed to report on these outcomes (this review is informed by monitoring best practices in questionnaire design across MCC to facilitate standardization in outcome measurement across evaluations in a sector); (iv) review data quality plan for training, field work management, and data entry; (v) ensure Independent Evaluator obtains all appropriate and required clearances (both local clearances and ethical review clearances); and (vi) review informed consent statement(s) to ensure appropriate promises of confidentiality (see MCC Guidelines for Transparent, Reproducible, and Ethical Data and Documentation (TREDD) for more details).

In addition to risks raised above, the risks facing the independent evaluation at this stage are:

- The timing of the survey is too early (before project implementation and therefore context may change significantly prior to the true baseline) or too late (during or after project implementation so that pre-intervention data is no longer available);

- Survey instruments that do not capture the data required to answer the evaluation questions;

- Excessively long surveys that affect data quality;

- Missed opportunities for standardization in outcome measurement for MCC meta-analysis in a sector; and

- Incomplete/inaccurate definition of survey team quantity and/or quality.

Baseline Report + Data

Within 12 months of the completion of baseline data collection[[Some evaluations do not collect baseline data. For these evaluations, the evaluator should ensure the Evaluation Design Report validates the evaluation design using available trend data and other sources to justify the decision to use end-line data only.]], the Independent Evaluator will produce a Baseline Report. The MCC EMC will review the Baseline Report with the following objectives in mind: (i) assess if evaluation is being implemented according to plan (threats to internal validity if an impact evaluation; appropriate sample sizes and response rates); (ii) in cases where the treatment does not begin until the end of program implementation, monitor and assess if project is being implemented according to plan (quantity, quality, and timing of outputs); (iii) assess whether the baseline data supports the program logic, if project risks have materialized, and if assumptions are holding; and (iv) ensure appropriate management of data (see TREDD Guidelines for more details).

In addition to risks raised above, the risks facing the independent evaluation at this stage are:

- Treatment and control/comparison groups that are not appropriately balanced (for example, on consumption or income);

- Significantly different context that can affect expected results;

- High non-response rates for a particular survey instrument (more than typical levels); and

- Problems with data quality (missing values and outliers) – to be managed by the evaluator and M&E Lead and flagged for the EMC as necessary.
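Two of the risks above, group balance and non-response, lend themselves to simple summary checks when reviewing a Baseline Report. The sketch below computes a standardized mean difference between treatment and comparison groups and a survey response rate. It is illustrative only: the data are made up, the function names are hypothetical, and any threshold for what counts as "appropriately balanced" or a "typical" response rate is a judgment for the evaluator and M&E Lead rather than something defined here.

```python
from math import sqrt
from statistics import mean, variance


def standardized_difference(treatment: list, comparison: list) -> float:
    """Difference in group means divided by the pooled standard deviation.

    Values near zero suggest the groups are similar on this variable.
    """
    pooled_sd = sqrt((variance(treatment) + variance(comparison)) / 2)
    if pooled_sd == 0:
        raise ValueError("No variation in either group; difference is undefined.")
    return (mean(treatment) - mean(comparison)) / pooled_sd


def response_rate(completed: int, sampled: int) -> float:
    """Share of sampled units that completed the survey instrument."""
    if sampled <= 0:
        raise ValueError("sampled must be positive")
    return completed / sampled


# Hypothetical baseline values (e.g. monthly household income, in local units).
treatment_income = [10, 12, 14, 16, 18]
comparison_income = [11, 13, 15, 17, 19]

smd = standardized_difference(treatment_income, comparison_income)
rate = response_rate(completed=850, sampled=1000)
print(round(smd, 3))  # -0.316
print(rate)           # 0.85
```

Reporting the same statistic for each key baseline variable and each survey instrument makes it easier for the EMC to see where imbalance or non-response is concentrated.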

| Following the TREDD Guidelines, de-identification and preparation of baseline or interim data should be undertaken only in response to specific demand for the data. Unless there is explicit demand for the public-use baseline or interim data, the Independent Evaluator should prepare the data package with the Data De-Identification Worksheet for documentation purposes but will NOT conduct the de-identification and DRB review process until the Final Data Package. |

| In some cases, the first round of data collection in an evaluation will occur after the program has started and will assess interim outcomes or implementation in addition to establishing a baseline for future analysis. This most commonly occurs when the baseline study incorporates a process evaluation of implementation to-date. In these cases, the report includes analysis and is therefore considered a combination baseline/interim report. It should undergo the analysis report review process and the required accompanying documentation should be produced (e.g. an Evaluation Brief should be produced to summarize the evaluative findings, not the baseline data). The report will be recorded as the baseline in the pipeline but should be named appropriately in its title and the catalog. |

Interim/Final Materials and Data Collection

This section applies to Interim and/or Final Materials and Data Collection.

The EMC will review and provide feedback on the Interim/Final Materials (questionnaires, informed consent, survey protocols) with the following objectives in mind: (i) assess if evaluation is being implemented according to plan (threats to internal validity if an impact evaluation; appropriate sample sizes and response rates); (ii) monitor and assess if project is being/was implemented according to plan (quantity, quality, and timing of outputs); (iii) ensure that final data collection begins AFTER the minimum agreed exposure period – i.e. the time required for the target population to be exposed to the program to produce the expected improvements/impact on outcomes; (iv) review questionnaires/survey instruments to ensure that the appropriate data is collected (particularly in relation to program design changes that may have occurred after baseline data collection) and that there is agreement on how outcomes of interest will be measured and who will be surveyed/interviewed to report on these outcomes (including those required to conduct an evaluation-based cost-benefit analysis, if required); (v) review data quality plan for training, field work management, and data entry; (vi) ensure Independent Evaluator obtains all appropriate and required clearances (both local clearances and ethical review clearances); and (vii) review informed consent statement(s) to ensure appropriate promises of confidentiality (see TREDD Guidelines for more details).

At this point, the evaluation needs to be monitored for all risks listed. MCC M&E will post the final Interim/Final Questionnaires on the MCC Evaluation Catalog.

Interim/Final Evaluation Report + Data

Within 12 months of the completion of interim/final data collection, the Independent Evaluator will produce an Interim/Final Evaluation Report. MCC has specified a review process (Annex 2) to manage the public release of Independent Evaluator reports that present results, whether Interim or Final.

In addition to all previously listed risks, the following risks to a high quality, credible evaluation should be considered when reviewing the Evaluation Report:

- Error or bias in analysis and/or interpretation of results.

- Lack of alignment of evaluation findings with evaluation questions, program Objective (stated in Compact/Threshold), and/or CBA.

Step 1 – Country Partner(s) Review. As with all previous key evaluation products/deliverables, the Independent Evaluator is responsible for sharing the Evaluation (Interim and/or Final) Report(s) with the MCA and country partners for review and comment. However, depending on the relationships with the partner government and the timing of the report, it may make sense for MCC M&E or MCA M&E to circulate the materials with the evaluators copied. The M&E Lead should facilitate the circulation of materials for review, which may be done virtually or through a local stakeholder workshop. Either way, the M&E Lead should ensure that all evaluation stakeholders are given an opportunity to review the report. It may be worthwhile gathering the contact information for stakeholders and/or data collection participants from the evaluator to include in the report review.

The purpose of this review is to ensure the Independent Evaluator has addressed any technical or factual errors in the report. For country stakeholders, during the Compact/Threshold period this should include the MCA CEO, MCA Sector Lead and MCA M&E Director, implementers, and implementing entities. In the post-program period, the MCA should designate appropriate representatives to review the report. Special consideration should be given to the appropriateness of these designated representatives and such individuals should be identified in the post-program M&E Plan. The MCA and any other necessary local stakeholders will provide written feedback that will be publicly documented in an Annex of the Report. The Independent Evaluator will provide written response to any comments and may elect not to take comments into consideration for the Evaluation Report. Any key stakeholder will then be given the opportunity to provide a public “Statement of Difference or Support” based on the evaluation firm’s report and response to feedback. The submission of the Evaluation Report(s) to MCC will include the following:

- Annex 1: Documentation of the comments/feedback from country and MCC stakeholders.

- Annex 2: Any “Statement of Difference or Support” from country stakeholders. Note that this is an optional statement and there is no template for it because it is not a standard document. If a country stakeholder wants to write such a statement, they may draft it and ask for feedback from the MCC M&E Lead, or just submit it to the MCC M&E Lead for public posting.


Keep in mind: Based on previous evaluation reports, the average length of the Step 2 process has been 26 weeks for interim reports and 22 weeks for final reports[[Average review lengths based on evaluation pipeline data for completed interim and final reports as of November 2019.]]. Given that these steps require coordinating multiple rounds of reviews by others (EMC and partner country) as well as drafting or revising new content (MCC Learning and Evaluation Brief), it is important for the M&E Lead to plan ahead, set and stick to realistic review timelines, keep the EMC informed of progress, and reserve sufficient time to manage and complete tasks.

Step 3 – MCC submits package for final Country Review. Next, MCC M&E will send the following to the MCA, or designated representative: (i) Evaluation Report with required annexes, (ii) Evaluation Brief, and (iii) Draft MCC Management Response. The MCA will review and have the option to provide a public Official Country Response[[The Official Country Response is an official host-country statement that either confirms their acceptance of the evaluation findings or documents any outstanding differences of opinion between the host country and the evaluator as it relates to (i) factual and/or (ii) technical issues.]] to the Evaluation Report, if necessary. When providing the documents to the MCA or designated representative, the M&E Lead should clearly communicate the expected timeframe within which a response is requested. If the deadline has passed and, after repeated polite reminders, no response is received, the M&E Lead should inform the MCA or designated representative that MCC will be proceeding with final MCC management approval and public posting of the Report and associated documents.

Step 4 – Final submission to MCC VP Review. The MCC M&E Managing Director, or designated representative, will send the following to MCC VPs for DCO, DPE, OGC, CPA, as well as the Chief of Staff: (i) Evaluation Report with required annexes, (ii) Evaluation Brief, (iii) MCC Learning, (iv) Draft MCC Management Response, and (v) Official Country Response (if provided). MCC VPs will finalize the Official MCC Response Statement to be posted with the public Evaluation Report.

Step 5 – Public Dissemination. Following the formal review, the documents will be publicly posted on the MCC Evaluation Catalog and the Evaluation Brief will be posted on MCC’s Evaluation Brief web page. The M&E Lead should work with the Evaluation Brief contractor to produce the web version.

| Following the TREDD Guidelines, de-identification and preparation of the Complete Data Package should be completed within 6 months of the completion of the final Evaluation Report (Annex 3). |

| To facilitate the effective dissemination and use of MCC evaluation results and learning, MCC and the evaluator produce a 4-page summary called the Evaluation Brief[[The Evaluation Briefs have replaced the Summary of Findings, which had been developed to disseminate findings succinctly among other aims (including providing details on program logic, program financials, and monitoring indicators, and putting the evaluation in the context of the specific piece of a compact/project that it was evaluating). The decision to switch to Evaluation Briefs was made in 2018 and was informed by the fact that new MCC products (like the Star Report) have been launched and can serve some of the purposes originally served by the Summary of Findings (e.g. program description and indicator performance).]]. These briefs are intended to be easily understood by both technical (in terms of evaluation work) and non-technical audiences. They summarize the core of the program being evaluated, the main findings of the evaluation in line with the evaluation questions, the evaluation questions themselves, the MCC Learning, and the evaluation methods. An Evaluation Brief should be produced for all interim and final evaluation reports. The key findings on the first page of the Evaluation Brief will be the source for all evaluation summaries, including in the Sector Packages and Star Report. |

| In line with MCC’s commitment to learning, M&E should facilitate a process to identify lessons for program operations and evaluation based on each interim and final evaluation report. These lessons are primarily for internal application, though they will be publicized on the Evaluation Catalog and will be summarized in the Evaluation Brief. The lessons document is referred to as MCC Learning and it should be produced as a standalone document prior to the close of Step 2.[[These lessons were previously recorded within the Summary of Findings. The Evaluation Brief’s four-page structure does not accommodate the full set of lessons (which often run over a page), so MCC Learning is now its own document within the interim/final report package. The full lessons (or a sub-set) should be summarized in the Evaluation Brief.]] |

Mapping Risk Assessment and Evaluation Stages

Each MCC M&E Lead and the M&E Managing Director will lead annual Risk Assessments of the independent evaluation portfolio. MCC M&E Management will trigger a Risk Review round; however, it is the responsibility of the MCC M&E Lead to continuously monitor and assess risks to raise with Management and the EMC. These Risk Assessments may identify additional points that require an MCC EMC decision meeting outside the milestones listed above, such as: (i) program re-scoping or re-structuring; (ii) significant deviations from original work plan; (iii) Independent Evaluator contract modifications; and/or (iv) loss of a counterfactual/Treatment group non-compliance/general threats to internal validity.

The table below sets forth the types of risks that may arise at various stages of the evaluation.

| Risk | Evaluability Assessment | SOW | Evaluation Design Report | Baseline | Baseline Report + Data | Final | Final Report + Data |

|---|---|---|---|---|---|---|---|

| Incorrect definition of the Project components that should be covered by the evaluation | X | X | X | X | X | X | X |

| Incorrect identification of type of evaluation that should be used | X | X | X | X | X | X | X |

| Hiring the Independent Evaluator too early resulting in unnecessary costs | X | X | X | X | X | X | X |

| Incomplete/inaccurate prioritization of evaluation questions and outcomes | X | X | X | X | X | X | X |

| Incomplete/inaccurate definition of research team qualifications | | X | X | X | X | X | X |

| Underestimating the costs of the evaluation | | X | X | X | X | X | X |

| Infeasible evaluation methodology | | | X | X | X | X | X |

| Lack of program implementation fidelity | | | X | X | X | X | X |

| Lack of alignment between the target population and the evaluation sample population | | | X | X | X | X | X |

| Lack of agreement or understanding on required exposure period | | | X | X | X | X | X |

| Sample sizes that are not large enough to detect impact | | | X | X | X | X | X |

| Survey instruments that do not capture the data required to answer the evaluation questions | | | | X | X | X | X |

| Missed opportunities for standardization in outcome measurement for MCC meta-analysis in a sector | | | | X | X | X | X |

| Incomplete/inaccurate definition of survey team quantity and/or quality | | | | X | X | X | X |

| Treatment and control groups that are not appropriately balanced | | | | | X | X | X |

| Significantly different context that can affect expected results | | | | | X | X | X |

| High non-response rates for a particular survey instrument | | | | | X | X | X |

| Problems with data quality | | | | | X | X | X |

| Error or bias in analysis and/or interpretation of results | | | | | | | X |