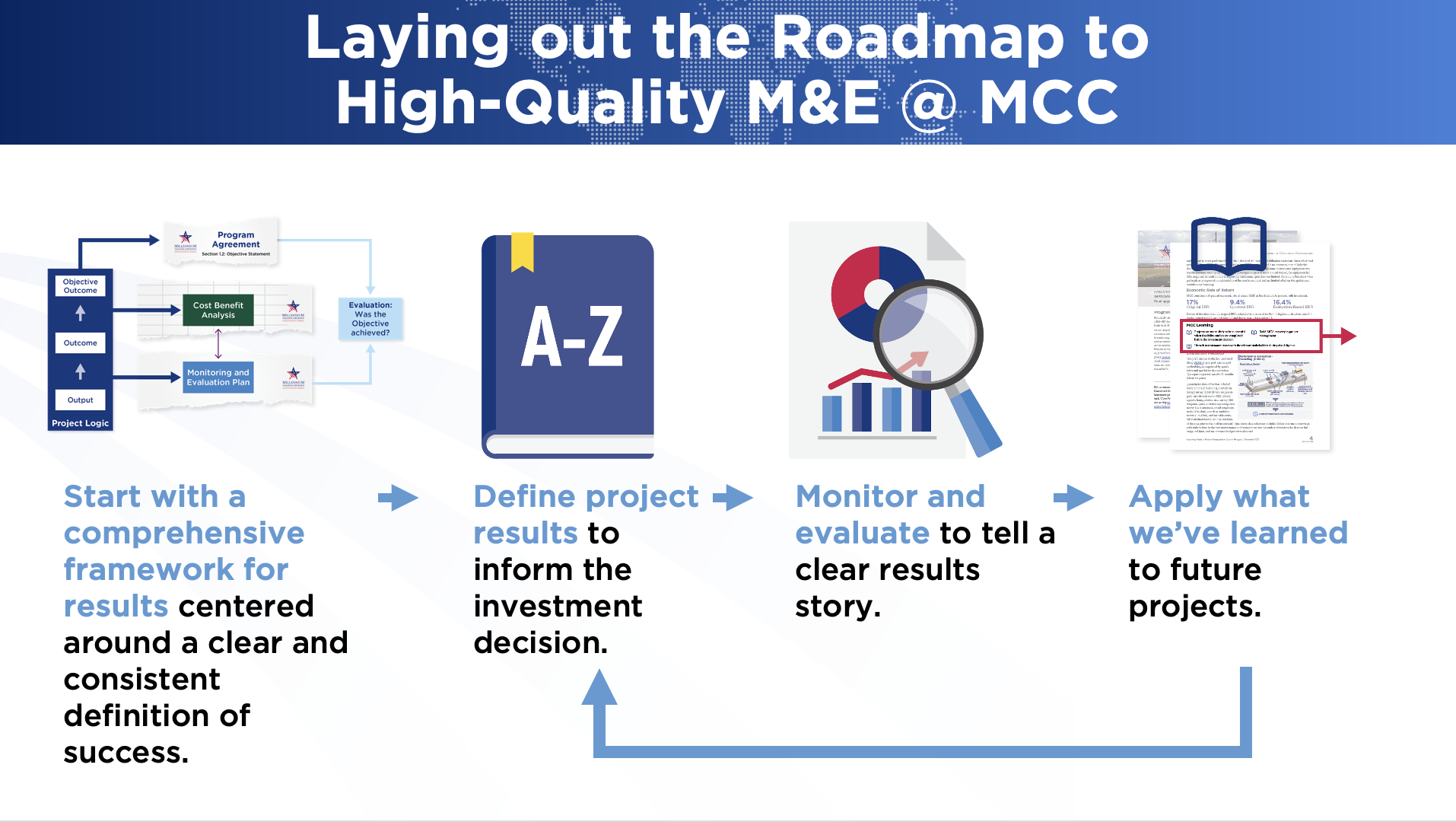

The four steps to achieving high-quality monitoring and evaluation.

A central theme of the policy revision is the need for greater collaboration between project designers and M&E. The policy now lays out transparently what is needed to produce high-quality M&E, giving project teams a goal to work toward.

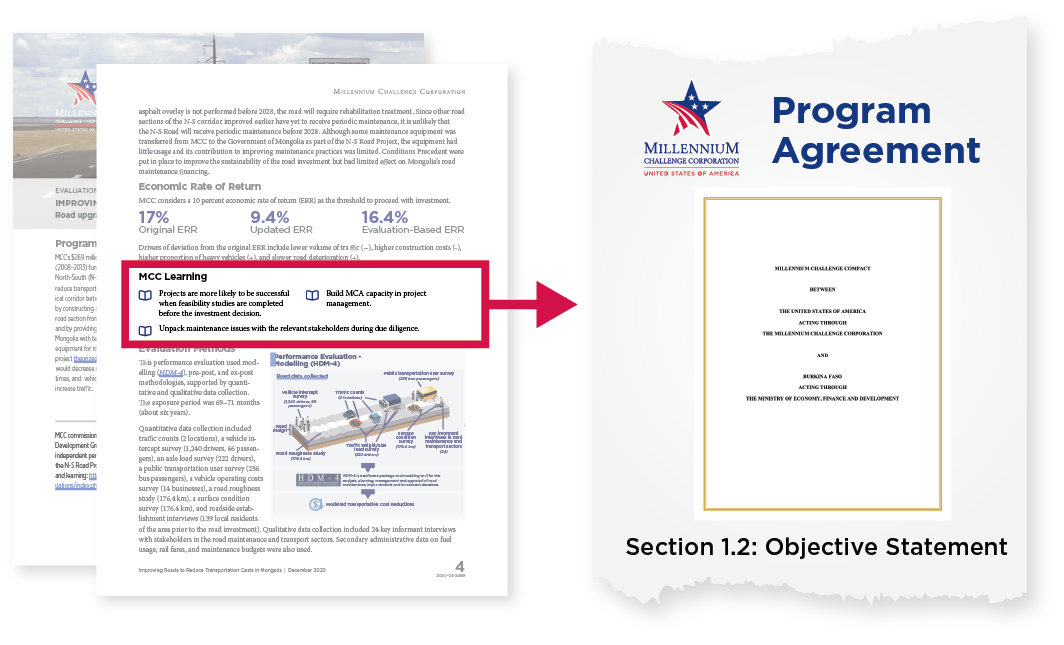

A comprehensive framework for results centers on a clear and consistent definition of success: the project objective.

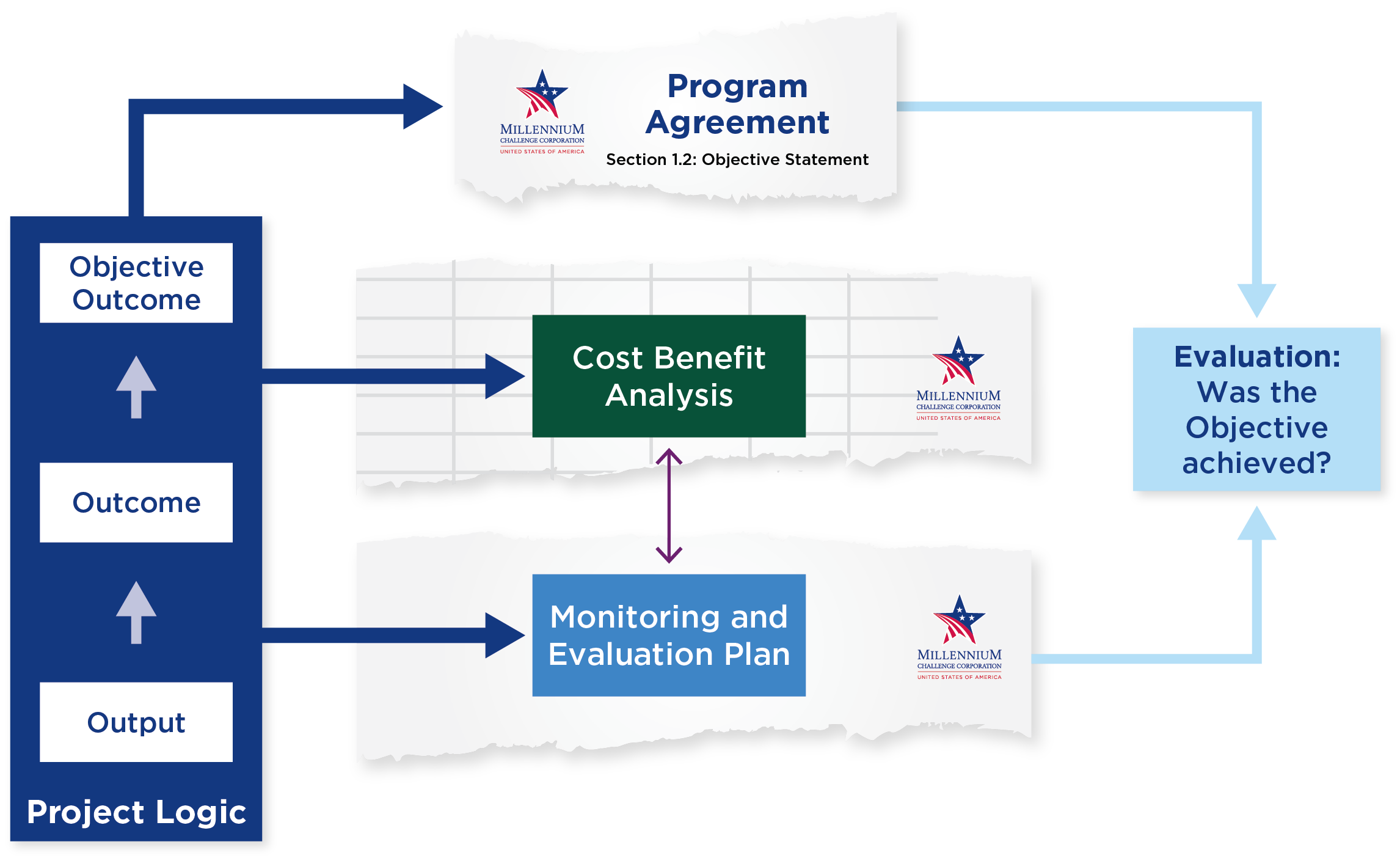

Before embarking on this policy revision, we often heard teams express confusion about how the components of the MCC results framework fit together, including the project objective, project logic, indicators, cost-benefit analysis, and independent evaluation. So this policy revision starts by addressing that confusion: it lays out a comprehensive framework for results.

The project objective, or the definition of success of a project, is the focal point of the results framework. The framework tells a logical story about how project funds will be used to achieve intermediate results that ultimately lead to that vision of success. Each result is connected to an indicator in the M&E Plan, so we have a clear, shared understanding of how to measure both success and progress along the way. The evaluation analyzes these results to determine whether the project objective was achieved and why or why not.

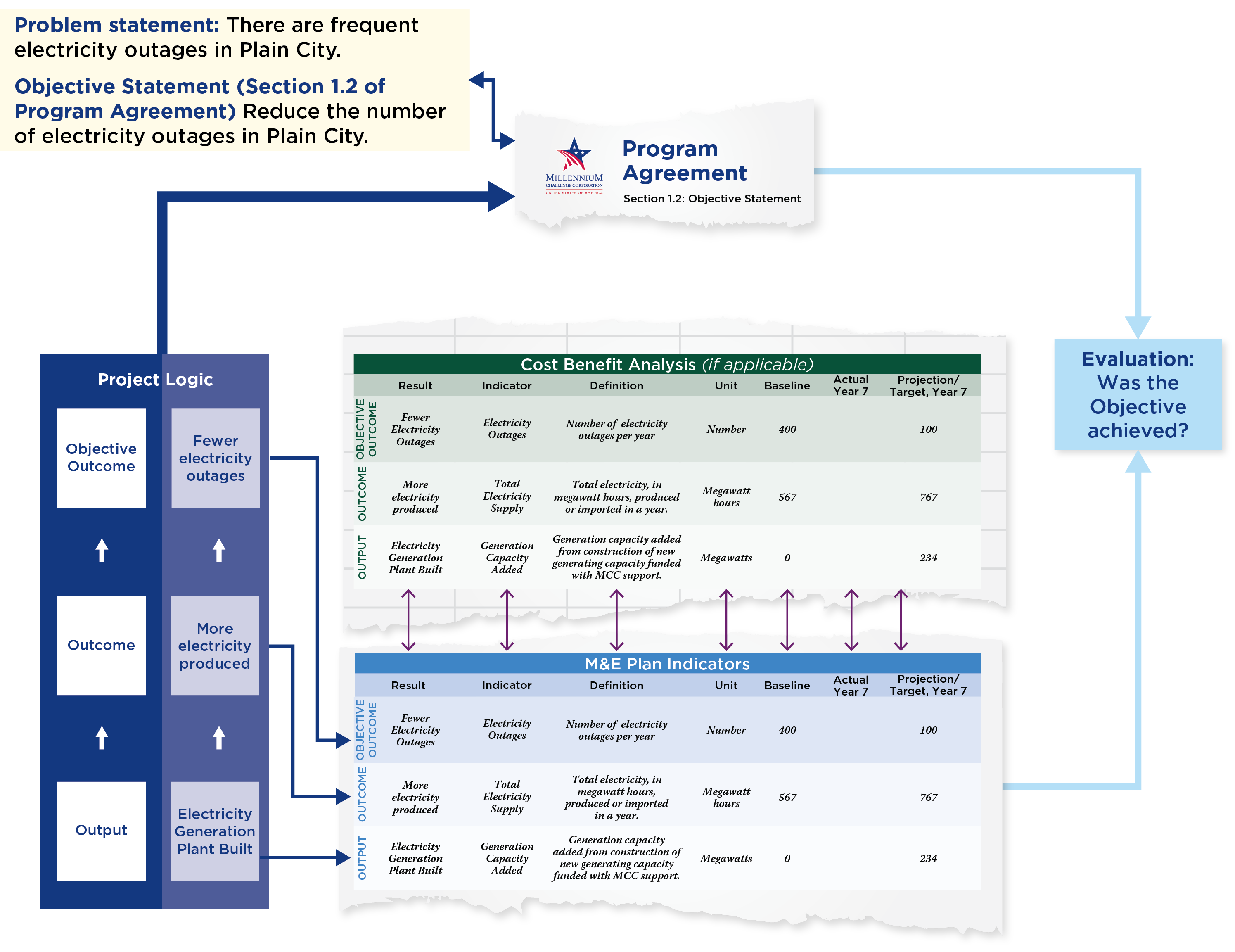

The results framework from Step 1 - filled in with sample details of a pretend project.

You can think of the above framework as an empty template that is filled in as a project is developed. Project designers document their plans and vision for the project in the framework. The extent to which the framework can be filled in differs for each project. In the revised policy, MCC establishes the “Results Definition Standard”: the minimum degree to which a framework should be filled in to meet MCC’s M&E Policy criteria at the time of the investment decision. Meeting this standard is necessary for high-quality M&E. The standard also gives MCC senior leadership a clear picture of the results they can expect if project funding is approved.

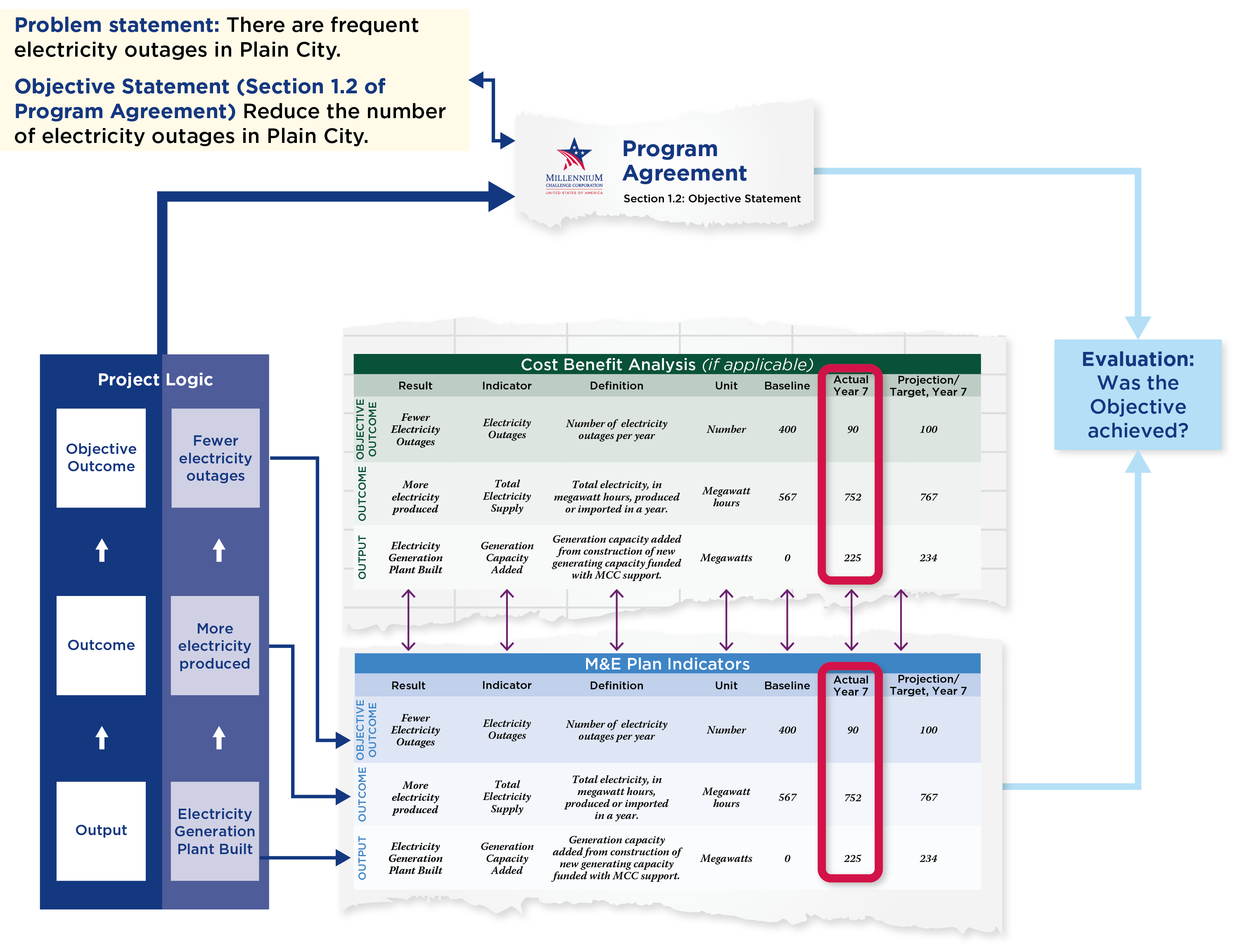

The results framework from Step 2 - filled in with the “actuals” measured.

MCC strives to apply lessons learned to future program design and implementation.

The goal of high-quality M&E is to facilitate real learning. With this policy revision, we hope to facilitate learning from each evaluation and carry these lessons forward. For example, as a result of our new focus on clearly defined project objectives, we will be able to produce clearer and shorter evaluation reports that are easy for project teams to digest and translate into practical lessons.

At MCC, we are committed to accountability, learning, and transparency. This means reflecting honestly on whether we achieved our project objective, and on where our project logic held up and where it fell apart. We will use this knowledge to inform future MCC programming and will share it with the public. By always seeking more accurate and cohesive approaches to understanding what our projects achieve, we can continue to reinforce a virtuous circle of planning, implementation, measurement, and learning at MCC.